By Adam Benjamin

Illustrations by Daniel Liévano

When certain events or behaviors are very consistent, we sometimes say, “You can set your watch by it.” The implication, of course, is that many things in this world are chaotic and unpredictable, but one of the few dependable things is the ticking of a clock. If we can be confident in anything, it’s that seconds will continue to tick by, 24 hours a day, 365 days a year.

Well, except we add a 366th day every fourth year.

Unless it’s the turn of a century … but only if that century isn’t divisible by 400.

Those things all have to do with small rounding errors related to how long it takes our planet to orbit the sun, but they illustrate a broader point: Time is not quite as reliable or consistent as we’d like to think.

It’s a little bit … finicky.

One of those idiosyncrasies is something called “leap seconds.” Every so often, our official clocks get a little out of sync with what they’re trying to measure, and we have to add an additional second to keep things accurate. That 61st second in a minute is known as a leap second. Leap seconds are similar to leap years in the sense that they’re units of time that we add to our clocks, but in many ways they’re more complicated and much more disruptive than the occasional Feb. 29. They’re a bit unpredictable by nature, and that makes them a natural foe of our contemporary dependency on precise timing.

Other shifts in time—like a leap year or daylight saving time—are consistent, scheduled events. They’re planned adjustments. Leap seconds are corrections, which means they’re more of a hassle. Daylight saving offers us a good example: While losing an hour in the spring is a bit unpleasant for most, it’s a planned adjustment, which means you can go to bed an hour earlier than usual to avoid that time being taken out of your nightly rest. Leap seconds, on the other hand, are more like getting ready to leave work when the clock hits 5 o’clock, but then watching your boss turn it back to 4 because the day was moving a little too quickly. It’s a small adjustment in the grand scheme of things, but it can be very disruptive—especially if you had plans immediately after work.

In fact, leap seconds are so disruptive that panels of people are debating what to do about them. Do we keep them, and face the challenges that come with their unpredictability? Or do we get rid of them, and embrace whatever timekeeping discrepancies arise? As you might expect for something as complicated as measuring time, there are no easy answers. The discussion about leap seconds continues as groups try to find the most effective solution to their disruption.

But in order to understand the future of time (or timing), we have to rewind a little bit. Just a few billion years ago.

A Slowly Spinning Planet

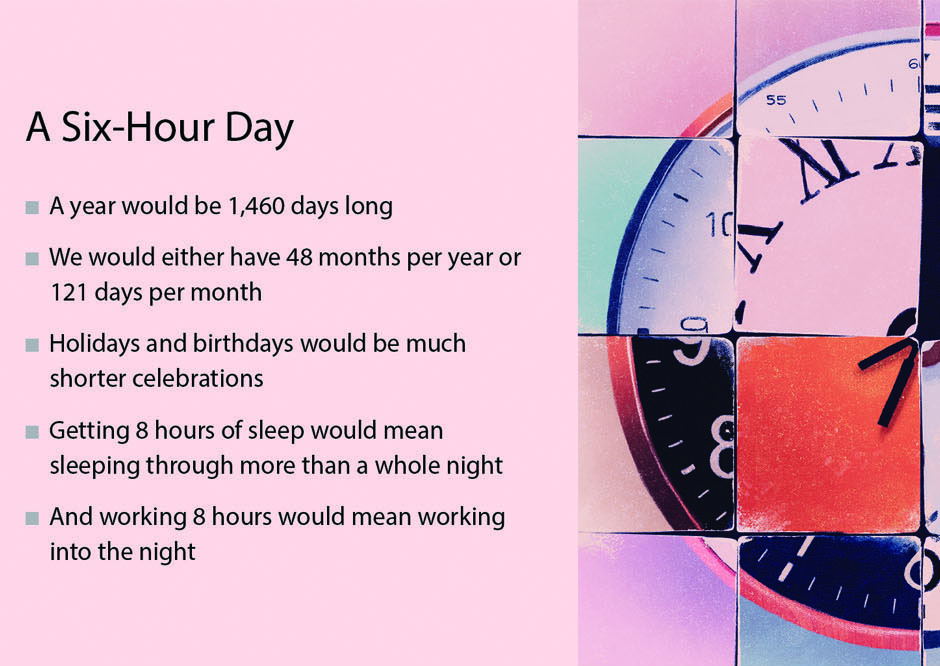

We’re all very accustomed to 24-hour days, but they’re a bit of a recent phenomenon in the history of our world. During Earth’s early days roughly 4.5 billion years ago, an entire day-and-night cycle was closer to six hours.[1]

Michael Richmond, Ph.D., an astronomer at the Rochester Institute of Technology, explained how we can measure the length of prehistoric days: “One method relies on the fine structure of coral polyps, the tiny individual creatures which make up coral reefs. We can see very fine layers in the walls of these structures, which correspond to the material added each day, as well as periodic variations in these layers, which indicate annual changes. In corals living today, there are an average of 365 little layers within each annual band, while fossils of corals which lived 350 million years ago show about 385 little layers per band.” More layers per band means more rotations (i.e., days) per year.
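As a rough illustration of that arithmetic, the short Python sketch below turns layers per band into an approximate day length. It assumes the year itself has stayed about the same length in hours, which is a simplification, and the function and constant names are ours rather than anything from Richmond’s work.

```python
# Assumption: a year has lasted roughly the same number of hours over the
# period in question (365.25 days x 24 hours = 8,766 hours).
HOURS_PER_YEAR = 365.25 * 24


def day_length_hours(days_per_year: float) -> float:
    """Approximate length of one day, given how many days fit into a year."""
    return HOURS_PER_YEAR / days_per_year


modern = day_length_hours(365.25)  # today's corals
ancient = day_length_hours(385)    # corals from about 350 million years ago

print(f"Modern day:  {modern:.1f} hours")
print(f"Ancient day: {ancient:.1f} hours")
```

Under that assumption, 385 layers per band works out to a day a little under 23 hours long.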

Understanding why the days have gotten longer requires a bit of an astronomy lesson.

Days, of course, are a measure of the Earth’s rotation—specifically, the amount of time it takes to complete one full rotation on its axis. The daytime portion happens when our location rotates in view of the sun, and we experience nighttime when our portion of the world faces away from the sun. However, there are other forces at play that influence the amount of time it takes for those things to happen, and over a few billion years, they’ve gradually increased the amount of time it takes our planet to spin around.

The main culprit? The moon. According to people who study really big space objects, Earth’s moon formed early in the life of our solar system (some 4.5 billion years back), when something roughly Mars-sized knocked into our planet and sent a sizable chunk of debris catapulting into space.[2] Earth’s gravity caught the moon, though, keeping it from flying off into space while also tugging at its rough exterior. That constant tugging slowed down the moon’s rotation until it became what scientists call “tidally locked,” meaning that the same part of the moon is always oriented toward the Earth, like two ice skaters locking eyes as one spins around the other.

While Earth’s gravity is what keeps the moon close, the moon has its own gravity, which, along with a little bit from the sun, pulls at the most tractable part of the Earth: its oceans. This is where things really start to get complicated: The resulting gravitational tug is responsible for the phenomenon of tides, with a couple of important nuances. First, the tidal bulge created by the moon’s gravity actually occurs in two locations: one nearer the moon, and one farther away from it. While it might seem like the bulge would exist only on the near side of the Earth, gravity and inertia interact in a way that creates a similar bulge on the opposite side of the planet.

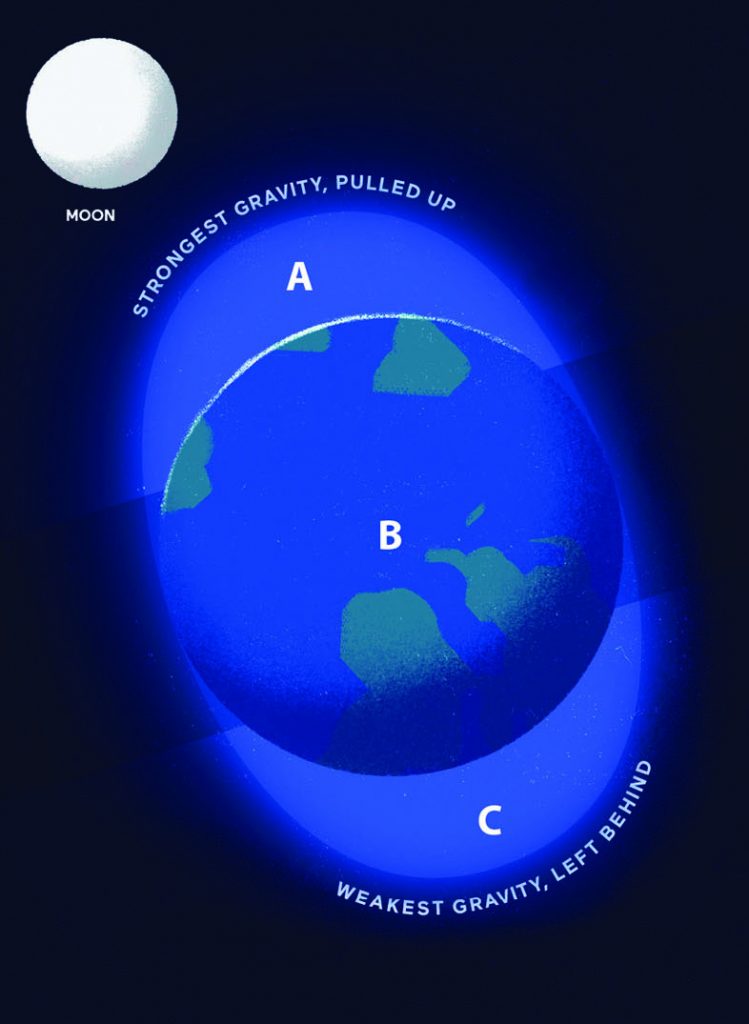

A Physics Professor Explains Tidal Bulge

“Consider three objects: a little blob of water, A, which is on the Moon’s side of the Earth, a chunk of the Earth’s core near its center, B, and a second blob of water, C, which is on the far side of the Earth, away from the Moon.

“The distance between the blob of water A and the Moon is smaller than the distance between the Earth’s core and the Moon, so the gravitational pull on A is larger than the pull on the core. That means that the Moon pulls A harder than it pulls the core, so A and the core are stretched away from each other. The net result: water on the Moon’s side of the Earth forms a little bulge which moves away from the core.

“Now consider the gravitational forces on the core and the blob C, which lies on the opposite side of the Earth. This time, it’s the core which lies closer to the Moon, and so it is the core which experiences the larger gravitational force. Once again, there’s a stretch, but this time, it’s the core which is pulled away from the blob of water. As a result, the poor ocean on the far side of the Earth is left behind the solid body of the Earth, and forms a little bulge; not because the water is being pulled away from the Earth, but because the Earth is being pulled away from the water.”

—Michael Richmond, Astronomer,

Rochester Institute of Technology
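For readers who like to see the numbers, here is a minimal Python sketch of the three-object comparison above. It uses Newton’s law of gravitation with rounded textbook values for the Moon’s mass, the average Earth-Moon distance, and Earth’s radius; the names are illustrative, and the model ignores everything except the Moon’s pull along a straight line.

```python
G = 6.674e-11                  # gravitational constant, m^3 kg^-1 s^-2
MOON_MASS = 7.342e22           # kg (rounded)
EARTH_MOON_DISTANCE = 3.844e8  # m, average center-to-center distance
EARTH_RADIUS = 6.371e6         # m (rounded mean radius)


def moon_pull(distance_m: float) -> float:
    """Acceleration toward the Moon (m/s^2) at a distance from its center."""
    return G * MOON_MASS / distance_m ** 2


pull_a = moon_pull(EARTH_MOON_DISTANCE - EARTH_RADIUS)  # blob A, near side
pull_b = moon_pull(EARTH_MOON_DISTANCE)                 # core, B
pull_c = moon_pull(EARTH_MOON_DISTANCE + EARTH_RADIUS)  # blob C, far side

# Positive differences mean the first object is pulled harder than the second.
print(f"A pulled harder than core by: {pull_a - pull_b:.2e} m/s^2")
print(f"Core pulled harder than C by: {pull_b - pull_c:.2e} m/s^2")
```

Both differences come out to roughly a millionth of a meter per second squared, and they are nearly equal, which is why the two bulges are about the same size.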

The second nuance deals with the interaction between tides, the moon, and Earth’s rotation. Because the planet is constantly spinning, the nearer peak of the tidal bulge is actually a little bit ahead of the moon’s orbit around the Earth, rather than directly below the moon. As a result, the moon’s gravity pulls against the planet’s rotation, creating a sort of friction known as “tidal drag.” Imagine that you’re riding a carousel, and you have a friend (let’s call her “Luna”) who’s not on the carousel and is chasing you. She wants to catch up to where you are so that she’s always parallel to you, but the carousel got a bit of a jump-start, so she’s a little bit behind you as you spin around. Your friend reaches out, grabs your arm, and starts pulling. You’re buckled in, so you don’t go flying off the carousel, but your friend’s pulling does slow the carousel down a tiny bit. In this scenario, you are the tide and Luna is, of course, the moon. That constant pulling very slightly slows down the Earth’s rotation.

It’s imperceptible to us over the course of a year or even a century, but over billions of years those forces have quadrupled the amount of time it takes the Earth to rotate.

In case any of that was too straightforward, a variety of other factors also contribute to the speed of rotation, either speeding it up or slowing it down. Some of those factors include: seasonal wind speeds, tide cycles, large storms, and differences in the rotational speed of the Earth’s outer core compared to the rest of the planet.[3] Because there are so many factors affecting that speed differently, the planet’s rotation decelerates at a variable rate. More plainly: Some years it slows down more than others. That’s why leap seconds are corrections, rather than planned adjustments: They’re unpredictable and must be made in response to measured differences.

But exactly what corrections are we making? Let’s jump back into the modern era to look at leap seconds in more detail.

Atomic Clocks and Technological Mishaps

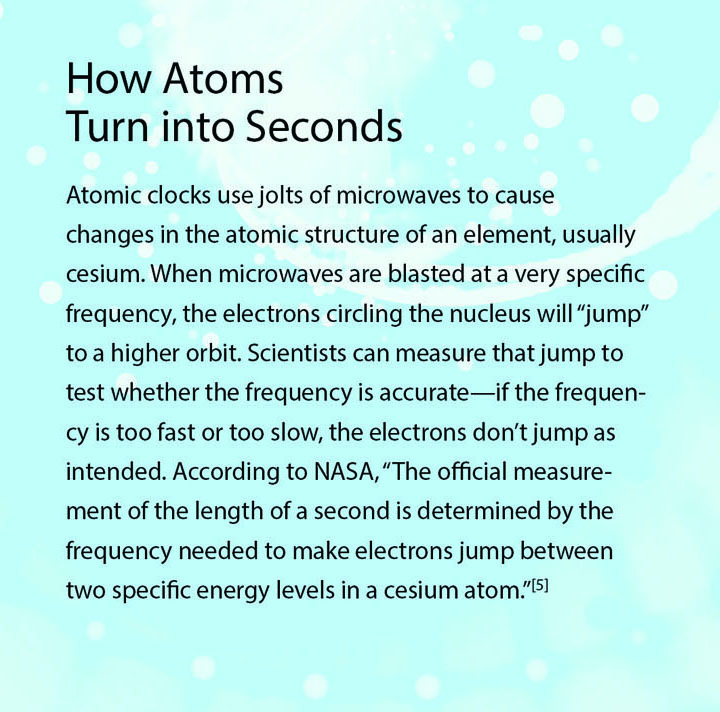

Leap seconds are necessary because the very official clocks we started making in the late 20th century are extremely precise—far more precise than the rotation of the Earth, which is what those clocks are trying to help us quantify. The most precise clocks on the planet are known as atomic clocks because they use the vibrations of atoms to measure time. Those measurements would stay accurate for hundreds of millions of years … if the Earth’s rotation were perfectly consistent.[4] But it’s not. So, for the purposes of representing the length of a day, atomic clocks are a little too precise.

One approach to solving this issue has been the creation of multiple time systems. Using atomic clocks gives us “atomic time,” usually indicated by the abbreviation TAI (from the French phrase meaning “international atomic time”). There’s also Global Positioning System (GPS) time, which uses effectively the same measurement but from a different starting point. Neither of these clocks incorporates leap seconds, which means they differ from other measures of time like Coordinated Universal Time (UTC), which adds leap seconds to atomic time to more accurately reflect the speed of Earth’s rotation. The clock most people are familiar with uses local time, which adjusts UTC according to your regional time zone.
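The relationships among those time scales boil down to fixed offsets, which the following Python sketch tries to show. It relies on two published values: TAI has been 37 seconds ahead of UTC since the start of 2017, and GPS time runs a constant 19 seconds behind TAI. The function names are ours, and the UTC offset is only valid for dates after 2017 and before any future leap second.

```python
from datetime import datetime, timedelta

# Offset in effect since the leap second at the end of 2016.
TAI_MINUS_UTC = timedelta(seconds=37)
# Fixed offset: GPS time has never taken a leap second.
TAI_MINUS_GPS = timedelta(seconds=19)


def utc_to_tai(utc: datetime) -> datetime:
    """Convert a UTC reading to atomic time (valid for 2017 onward)."""
    return utc + TAI_MINUS_UTC


def utc_to_gps(utc: datetime) -> datetime:
    """Convert a UTC reading to GPS time by way of TAI."""
    return utc_to_tai(utc) - TAI_MINUS_GPS


utc = datetime(2020, 3, 25, 12, 0, 0)
print("UTC:", utc.time())
print("TAI:", utc_to_tai(utc).time())
print("GPS:", utc_to_gps(utc).time())
```

Each new leap second widens the gap between UTC and the other two scales by one more second, so any system that converts between them needs an up-to-date offset.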

Without leap seconds, local time would diverge, extremely slowly, from the observable time of day. To the average person, the difference would be imperceptible even hundreds of years from now. But thousands of years in the future, things would feel a little off. Noon on the clock would no longer mark the sun’s highest point; it would arrive while the sun was still climbing through the morning sky. Leap seconds ensure that our clocks match the observable time.

However, that goal might not be worth the trouble that leap seconds create.

Today, many industries depend on precise timing systems, and those systems can be seriously disrupted by the insertion of leap seconds. Douglas Arnold, Ph.D., principal technologist at Meinberg-USA, offered some perspective on the challenges.

“In theory, leap seconds can be handled by software … [but] in practice, problems arise because timing is distributed among a large number of devices in a network all designed by different people. So there are inconsistencies with when pending leap second events are announced, how long the information takes to propagate through the network, and what happens if there is a missing or extraneous leap second notification which is later corrected.”

In other words, there are a variety of factors that affect the implementation of leap seconds across different systems, so it’s difficult to perfectly synchronize them. For many industries, that’s a huge problem. Arnold gave us telecommunications as one example: “Telecommunications requires precise time at all cellular base stations so that mobile phones can operate in the presence of overlapping base stations without the base stations interfering with each other. If they do interfere with each other, then calls will be dropped and data will fail to download. In other words, your cell phone is useless without precise timing.”

And the risks extend to plenty of other industries, including power, finance, broadcasting, information technology, and more. Even industries like manufacturing are affected. According to Arnold, “Manufacturers use precise timing to coordinate the activities of robotics for automated assembly and testing. Failures in timing can result in work stoppage, damage to equipment, and injuries to workers.”
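Part of the difficulty is that many everyday software tools simply have no slot for a 61st second. The Python sketch below is only an illustration (the variable names are ours), but it shows two standard-library behaviors: the `datetime` type refuses a seconds value of 60, and Unix-style timestamps count just one second across the leap second at the end of 2016.

```python
import calendar
from datetime import datetime

# The leap second at the end of 2016 was labeled 23:59:60 UTC.
try:
    datetime(2016, 12, 31, 23, 59, 60)
    leap_second_representable = True
except ValueError:
    # Python's datetime only accepts seconds in the range 0..59.
    leap_second_representable = False

# Unix-style timestamps for the second before and the second after.
before = calendar.timegm((2016, 12, 31, 23, 59, 59))
after = calendar.timegm((2017, 1, 1, 0, 0, 0))
unix_gap = after - before

print("Can datetime hold 23:59:60?", leap_second_representable)
print("Unix seconds between the two timestamps:", unix_gap)
```

Two real seconds passed between those timestamps (23:59:59, then 23:59:60, then 00:00:00), yet this style of timekeeping counts only one, so every system has to decide for itself what to do with the extra second.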

The Smeared Second

Among less dire consequences, leap seconds can also lead to websites crashing. When a leap second was inserted in June 2012, sites like Reddit and Gawker went down. According to a report from Wired, those crashes were largely the result of server overloads when systems failed to properly adapt to the adjustment.[6] Leap seconds are added inconsistently (we had seven in the ’90s, but only two in the following decade, for example), so the systems that are designed to incorporate them don’t see routine testing and usage. Those systems are less insulated against failure because they aren’t as finely tuned as processes that have to run every day.

The inconsistency and unpredictability of leap seconds make them surprisingly hard to tackle from a technological standpoint. Other time adjustments are much more defined. Take daylight saving time, for example: Even though it messes with our clocks twice a year, those changes happen on the same schedule every year (in the United States, the second Sunday in March and the first Sunday in November), and they don’t affect the actual measure of time. Instead, they simply adjust what time is being displayed.

Leap years are a closer comparison, because they involve a unit of time being inserted into the year. We cram an entire extra day into the year, and yet technology seems to handle leap years without issue. But again, the difference is the systematic nature of leap years: We have one every fourth year, except in centennial years that aren’t divisible by 400 (three out of every four). Those rules can be programmed into calendars, which means, from the computer’s perspective, they’re all part of the plan.
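To show how little it takes to program those rules, here is a small Python sketch of the Gregorian leap-year test. The function name is ours; the last line cross-checks it against Python’s built-in `calendar.isleap`.

```python
import calendar


def is_leap_year(year: int) -> bool:
    """Every fourth year, skipping century years not divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


for year in (1900, 2000, 2020, 2100):
    print(year, is_leap_year(year))

# Sanity check against the standard library over several centuries.
assert all(is_leap_year(y) == calendar.isleap(y) for y in range(1600, 2401))
```

Because the rule depends only on the year number, a computer can work out every future Feb. 29 in advance. No comparable formula exists for leap seconds.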

Leap seconds, on the other hand, seem to come out of nowhere, which is why multiple systems went haywire in 2012. However, fewer outages were reported in the wake of the 2015 and 2016 leap seconds, thanks in part to newer techniques for implementing these adjustments. One of these methods attempts to “smear” the extra second over the course of the day, rather than inserting a full second at one moment.

Google pioneered this technique in 2008, and wrote a blog post a few years later detailing the logic and the methodology:

“We modified our internal NTP [network time protocol] servers to gradually add a couple of milliseconds to every update, varying over a time window before the moment when the leap second actually happens. This meant that when it became time to add an extra second at midnight, our clocks had already taken this into account, by skewing the time over the course of the day. All of our servers were then able to continue as normal with the new year, blissfully unaware that a leap second had just occurred.”[7]

Those smaller adjustments essentially mask the larger adjustment of adding a whole second. Imagine that you were asked to do 60 push-ups tomorrow. You could try to do those 60 push-ups all at once, but a lot of people will only make it partway through before the muscles in their chest, shoulders, and torso become too fatigued to continue. So perhaps you opt for doing 5 push-ups every hour for 12 hours. Sure, those last few sets are still going to be difficult, but each set is less difficult and disruptive than a sudden burst of 60 push-ups. Smearing seconds follows similar logic.
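The idea can be sketched in a few lines of Python. This is a simplified illustration, not a description of Google’s servers: the 24-hour window and the straight-line ramp are assumptions made for clarity, and the names are hypothetical.

```python
SMEAR_WINDOW_S = 24 * 60 * 60  # assumed window length: 24 hours, in seconds
LEAP_S = 1.0                   # the one extra second being absorbed


def smear_offset(seconds_into_window: float) -> float:
    """Portion of the leap second absorbed so far, as a linear ramp from 0 to 1."""
    progress = min(max(seconds_into_window / SMEAR_WINDOW_S, 0.0), 1.0)
    return LEAP_S * progress


for hours in (0, 6, 12, 18, 24):
    offset_ms = smear_offset(hours * 3600) * 1000
    print(f"{hours:2d} h into window: clock adjusted by {offset_ms:.0f} ms")
```

With these assumptions, each second in the window is stretched by about 11.6 microseconds, far too little for most software to notice, and by the end of the window the whole leap second has been absorbed.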

The Future of Leap Seconds

Given how disruptive leap seconds can be, many people have questioned whether we should continue them. Atomic clocks allow us to be extremely precise (we can measure in nanoseconds, or billionths of a second), so why do we need to constantly adjust our official clocks to something as erratic as the Earth’s rotation?

In 2015, Quartz published an article titled “The origin of leap seconds, and why they should be abolished,” arguing that the ostensible purpose of adding leap seconds is out of touch with our actual needs. “At this rate, 100 years from now we will have let the earth deviate just over a minute, and it will be 5,800 years before clocks deviate an hour—a shift that countries with daylight saving time currently put up with twice every year. That’s further in the future than the invention of writing is in the past.”[8]
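The figures in that quote follow from simple proportional arithmetic, sketched below in Python. The average drift rate is an assumption backed out of the quoted 5,800-year figure rather than a measured value, and the real rate varies from year to year.

```python
# Assumed average rate, backed out of the quoted figure of one hour
# (3,600 seconds) of drift in 5,800 years.
DRIFT_S_PER_YEAR = 3600 / 5800


def drift_seconds(years: float) -> float:
    """Accumulated clock drift, in seconds, after a number of years."""
    return DRIFT_S_PER_YEAR * years


def years_until(drift_s: float) -> float:
    """Years needed to accumulate a given drift, in seconds."""
    return drift_s / DRIFT_S_PER_YEAR


print(f"Drift after 100 years: {drift_seconds(100):.0f} seconds")
print(f"Years until one hour of drift: {years_until(3600):.0f}")
```

At roughly 0.62 seconds per year, a century of skipped leap seconds adds up to just over a minute, matching the article’s estimate.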

The World Radiocommunications Assembly, which meets every three or four years, has been debating what to do with leap seconds for several sessions. Vincent Meens, head of the Frequency Bureau at CNES—France’s equivalent of NASA—had this to say in 2015: “You have to realize that there were problems with almost every leap second in the last 40 years, although not as important and publicized as in 2012. Stopping the insertion of the leap second will mean saving time and money on the software updates and also removing the risks associated with inserting the leap second.”[9]

In that same interview, he said, “It’s very important that we [make] a decision on this issue [when the assembly meets] in November 2015. We have been working on this topic for many, many years.” Meens laid out the four options the assembly was considering for that meeting:

- Stop inserting leap seconds into UTC

- Maintain UTC and create another continuous time scale based on atomic time

- Keep UTC, but broadcast the difference from atomic time alongside it

- Continue to consider the implications before making a decision

So, what did the assembly decide? It apparently wanted to continue working on the topic for many years, deferring the decision until 2023.[10]

To be fair, any decision will carry some degree of consequences. The first option carries perhaps the most straightforward implications: Simply using atomic time would mean that our clocks would eventually deviate from the rotation of the Earth, leading to about a minute’s worth of time slippage every century. But it would also eliminate all of the trouble that systems have when leap seconds are added. Advocates of this method have also allowed for the option of adding leap minutes or hours, rather than seconds, which would reduce how often we would need to adjust our time systems.

Creating a second continuous time scale is a bit harder to evaluate. As with any new system, there are potential issues with implementation and adoption: Would every country accept the new scale? Or would some countries value the accuracy to the observable day over the precision of atomic time? Perhaps governments would be allowed to choose their preferred time scale, but then how do we address the discrepancies between those two pools? The assembly would also need to consider the costs associated with implementing a new time scale—the price that would come with development, programming, and maintenance.

The third option, keeping UTC and broadcasting the difference from atomic time alongside it, seems to be more of a middle ground. It dodges the problems of creating a new time scale, opting instead for the issues that come with modifying an existing one. This option does the least to alleviate the technical pain points created by leap seconds, asking companies and other organizations to continue finding solutions for these unpredictable additions to our clocks.

So, why the eight-year delay on making a decision? Each of these options comes with its own benefits and drawbacks, which means a vote for any one method is a vote for some degree of difficulty. Each person in the assembly is bringing a different perspective to the issue, and it’s likely difficult to get a room full of people to agree on which difficulties they prefer. Think about how much trouble it can be for a small group of people to agree on pizza toppings—a relatively low-stakes issue in the grand scheme of things. Now imagine a whole assembly of people trying to come to a consensus about whether we should take pepperoni off the order, or just order a second pizza, or order the usual and just let people pick off the pepperoni if they don’t want it. When you factor in the global scale of the consequences, the assembly’s slow, deliberate pace is more understandable.

Ticking On

The World Radiocommunications Assembly won’t meet for another three years, meaning it’s quite possible for us to have another leap second before we figure out what to do with the problem. We could also see the WRA defer the decision until the next conference three or four years later. In either case, organizations need to understand the issue and have clear systems in place to minimize its impact (like smearing the leap seconds).

From far enough away, though, leap seconds and the phenomena behind them are rather fascinating. They exist because we created a concept to describe an experience (days), and in our attempts to measure that experience with more precision, we invented a problem. Ultimately, leap seconds are reminders that time isn’t the omnipresent force we tend to imagine it as.

It’s just a way for us to measure our collective trip through the solar system.

Adam Benjamin is an editor and writer living in Seattle, Wash., and has previously written about game design, filmmaking insurance, and artificial intelligence. He would like to thank Drs. Arnold and Richmond for their contributions to this piece.

References

[1] “Why do we have a summer solstice anyway?”; Vox; June 21, 2019.

[2] “NASA scientist Jen Heldmann describes how the Earth’s moon was formed”; Solar System Exploration Research Virtual Institute; accessed March 25, 2020.

[3] “No, this winter solstice wasn’t the longest ever. Scientists explain what we got wrong.”; Vox; Dec. 23, 2014.

[4] “How the U.S. Built the World’s Most Ridiculously Accurate Atomic Clock”; Wired; April 4, 2014.

[5] “What Is an Atomic Clock?”; NASA; June 19, 2019.

[6] “The Inside Story of the Extra Second That Crashed the Web”; Wired; July 2, 2012.

[7] “Time, technology and leaping seconds”; Google Blog; Sept. 15, 2011.

[8] “The origin of leap seconds, and why they should be abolished”; Quartz; June 29, 2015.

[9] “ITU INTERVIEWS: Vincent Meens on the Leap Second”; YouTube; June 26, 2015.

[10] “Leap-second decision delayed by eight years”; Nature; Nov. 20, 2015.