By Randall Stevenson

At some point in their career, every actuary will observe human behavior that, from an actuarial or economic perspective, defies the theory that people act to promote their self-interest. How often have we observed people sell their benefit entitlements for substantially less than the economic value of what they would otherwise receive?

It happens often enough that industries have emerged to allow people to “cash out” of structured settlements, future insurance payments, streams of annuity payments, and even their paychecks. When the deferred retirement option plan (DROP) was introduced, it allowed employees eligible for retirement to freeze their defined benefit pension, continue working for two or three years, and receive a lump sum at retirement equal to the benefits they would have been paid for those years. In pricing the plan, the initial assumption was people would behave in their best economic interest, and the result was DROP would have a modest cost because it provided an additional option to the plan participant. As experience unfolded, however, offering DROP produced surprising savings for the retirement plans.

People often do not behave in their best economic interest—not only on an individual basis, but even on a macroeconomic scale. Actuarial predictive analytics is emerging from a nascent actuarial exercise to a full field of practice because we are recognizing that predicting and accurately modelling human behavior is playing an increasingly significant role in actuarial science.

Early in actuarial training, one learns the feasibility of an insurance product is based on the policyholder’s utility of expected wealth being greater than the expected utility of wealth when risk is introduced; that is, U(E(W))>E(U(W)). However, this fails to explain why a person would buy life insurance and at the same time a lottery ticket. To understand and model this seemingly contradictory behavior, we need to improve our understanding of cognitive science, the study of thought, creation of meaning, and assignment of values upon which decisions are based.
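
The inequality can be checked numerically for any concave utility function. The following is a minimal sketch, assuming a logarithmic utility and a hypothetical two-outcome wealth distribution; neither comes from the training material referred to above.

```python
import math

def expected_value(outcomes):
    """Probability-weighted mean of (probability, wealth) pairs."""
    return sum(p * w for p, w in outcomes)

def expected_utility(outcomes, utility):
    """Probability-weighted mean of the utility of each wealth level."""
    return sum(p * utility(w) for p, w in outcomes)

# Hypothetical risk: a 1% chance that a loss cuts wealth from 100,000 to 20,000
uninsured = [(0.99, 100_000.0), (0.01, 20_000.0)]
utility = math.log  # assumed concave (risk-averse) utility

u_of_expected = utility(expected_value(uninsured))  # U(E(W))
expected_u = expected_utility(uninsured, utility)   # E(U(W))

# Certain wealth that feels as good as staying uninsured
certainty_equivalent = math.exp(expected_u)
max_premium = 100_000.0 - certainty_equivalent  # most the policyholder would pay
expected_loss = 0.01 * 80_000.0

print(u_of_expected > expected_u)   # True for any concave utility
print(max_premium > expected_loss)  # True: room for a loading above expected loss
```

Under these assumptions the policyholder would pay roughly twice the expected loss for full cover, which is the margin that makes the product feasible.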

In the 18th century, Swiss mathematician Daniel Bernoulli approached the St. Petersburg paradox by mathematically modelling utility instead of money to describe human behavior. The eventual failure of utility theory to consistently predict human behavior resulted in psychology and economics taking separate developmental paths. In the 20th century, two psychologists, Amos Tversky and Daniel Kahneman, studied how people make choices. Their results defied classical utility theory, but also advanced our understanding of the psychology of decisions and revolutionized economics. From their work, the field of behavioral economics evolved and reunited the paths of economics and psychology. The body of their work eventually garnered a Nobel Prize in Economics, although the prize was awarded only to Kahneman because Tversky had died. They demonstrated the failure of utility theory through simple experiments, such as this one:

People were given $1,000 and offered the option of receiving an additional $500 or taking a 50-50 chance of receiving an additional $1,000 or $0. Most people chose the $500.

However, when people were given $2,000 and a 50-50 chance of losing $1,000 or $0 against the option of paying $500 to avoid the risk, most people opted to retain the risk. According to utility theory, the first scenario places a higher utility on $1,500 than the expected utility of a 50-50 chance between $1,000 and $2,000. The second scenario places a lower utility on the $1,500 than the expected utility of the 50-50 chance between $1,000 and $2,000.
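
The contradiction is easier to see once both scenarios are reduced to final wealth. A short sketch (the helper name `final_wealth` is only illustrative):

```python
from fractions import Fraction

def final_wealth(endowment, changes):
    """Map each final wealth level to its probability."""
    dist = {}
    for prob, change in changes:
        wealth = endowment + change
        dist[wealth] = dist.get(wealth, 0) + prob
    return dist

half = Fraction(1, 2)

# Scenario 1: start with $1,000, choices framed as gains
gain_sure = final_wealth(1000, [(1, 500)])
gain_gamble = final_wealth(1000, [(half, 1000), (half, 0)])

# Scenario 2: start with $2,000, choices framed as losses
loss_sure = final_wealth(2000, [(1, -500)])
loss_gamble = final_wealth(2000, [(half, -1000), (half, 0)])

print(gain_sure == loss_sure)      # True: both leave exactly $1,500
print(gain_gamble == loss_gamble)  # True: both are 50-50 between $1,000 and $2,000
```

Because the two scenarios offer identical distributions of final wealth, no utility function defined on wealth alone can prefer the sure amount in one and the gamble in the other.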

They expanded their study using different probabilities and found that for high-probability events, people tend to be risk-averse on gains and risk-seeking on losses; for low-probability events, however, people tend to be risk-seeking on gains and risk-averse on losses. This partially explained why the same person would buy insurance and a lottery ticket. People are loss-averse rather than risk-averse. Their seminal works, “Judgment Under Uncertainty: Heuristics and Biases” and “Prospect Theory: An Analysis of Decision under Risk,” were first published in the 1970s. Cognitive science is now being used in politics, medicine, public policy development, professional athlete evaluations, marketing, legal judgments, military strategy, finance, statistical analysis, and many other fields.
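
Loss aversion can be sketched with a value function that is steeper for losses than for gains. The functional form and the parameters below (curvature 0.88, loss-aversion multiplier 2.25) are estimates Tversky and Kahneman reported in later work; they are used here as illustrative assumptions, and the sketch leaves probability weighting out.

```python
def value(x, alpha=0.88, loss_aversion=2.25):
    """Prospect-theory style value of a gain or loss x, measured from a reference point."""
    if x >= 0:
        return x ** alpha
    return -loss_aversion * (-x) ** alpha

# A fair 50-50 bet: win $100 or lose $100
fair_bet = 0.5 * value(100) + 0.5 * value(-100)
print(fair_bet < 0)  # True: the bet feels like a losing proposition

# Gain needed before a 50-50 chance of losing $100 feels acceptable
break_even_gain = (2.25 * 100 ** 0.88) ** (1 / 0.88)
```

With these parameters the potential gain has to be roughly two and a half times the potential loss before the bet feels neutral.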

Plato, and later Spock of Star Trek fame, presented the conscious mind as consisting of emotion and reason, with the two forces working in opposition. Both Plato and Spock advocated the elevation of reason through the suppression of emotion. In reality, our brains tend to operate using an automatic system (where the emotions and intuition reside) and call upon a system involving mental effort when the automatic system is surprised or does not have a ready response. The system involving mental effort is where logic, arithmetic, and self-control reside. The two systems do not oppose one another, but work together to minimize effort and optimize performance. Kahneman refers to these as System 1 and System 2, but for simplicity I will refer to the automatic system as “intuition” and the effortful system as “reason.”

We have since discovered that the good feelings that come from the brain’s automatic release of dopamine for being correct and the lack of good feelings associated with the brain withholding the release of dopamine when we make an error are vital to our learning process. Without the emotional reward for being correct, we would not develop reasoning skills. Similarly, reasoning often tells the brain when to release dopamine.

Reason and emotion rely upon each other, and both are needed for the proper development of human consciousness.

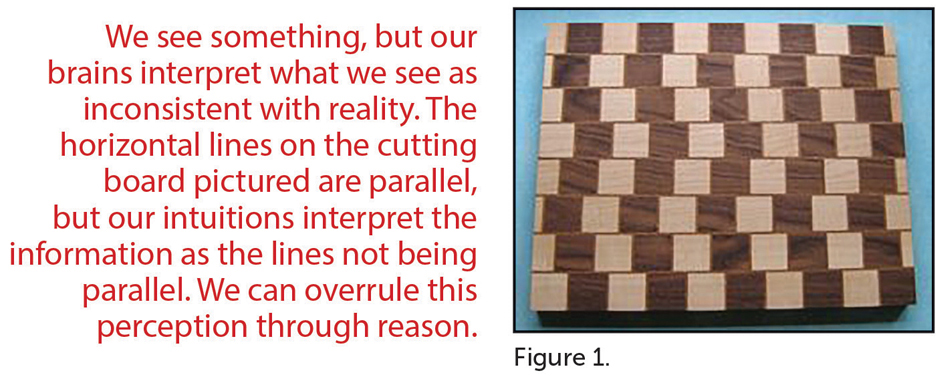

However, there is also a part of our mental development that defies reason while being divorced from emotion. An excellent example is an optical illusion. We see something, but our brains interpret what we see as inconsistent with reality. The horizontal lines on the cutting board pictured in Figure 1 are parallel, but our intuitions interpret the information as the lines not being parallel. Even after we use a ruler to verify the lines are the same distance apart, our intuitive brains still interpret them as being sloped toward or away from each other. We can overrule this perception through reason. The bad news is that because our intuition can overpower reality in such a simple case, it may also do so in more complex circumstances. The good news is that through reasoning we can decide to ignore the falsehoods provided by our intuition, but only if we can identify them.

The ability to recognize and project patterns is a key factor in the ability of humans to survive and thrive. Testing this ability is a common component used in measuring human and higher animal intellect. Consider the importance of the recognition of the pattern of seasons to early man, or even something as basic as the pattern of night and day. As part of our survival instinct, our brains seek patterns and assume correlations. The brain’s need to find patterns often overrides reason and sometimes leads people to compulsively seek patterns in random events, such as gambling, or to quickly assume a pattern exists based on inadequate information, such as one’s favorite team winning or losing based on whether one is carrying a good-luck charm. The need to find patterns also leads to imagining patterns before enough information is available to reasonably justify the patterns.

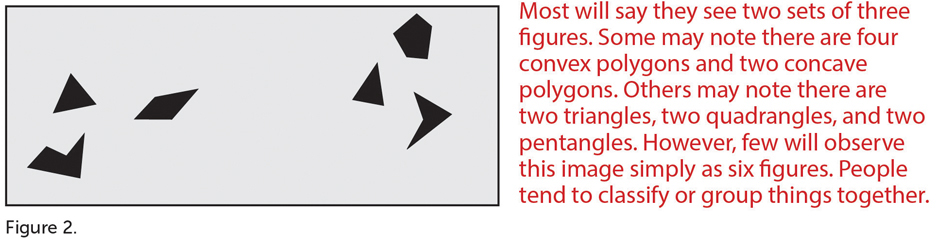

When people look at Figure 2, most will say they see two sets of three figures. Some may note there are four convex polygons and two concave polygons. Others may note there are two triangles, two quadrangles, and two pentangles. However, few will observe this image simply as six figures. People tend to classify or group things together.

Our tendency to classify and group leads us to finding similarities while often overweighting the correlation based on similarities. This tendency lends itself to biases and prejudices. Although we cannot divorce the mind from decision-making, we can become more aware of its vulnerabilities, especially those which can impact an actuary’s professional judgment or enhance our understanding of policyholders’ seemingly irrational behaviors.

Heuristics

A heuristic is a practical technique for problem solving or self-discovery that is satisfactory for the immediate goal. A heuristic is not necessarily optimal, perfect, or rational. Heuristics tend to speed up the process of finding a satisfactory solution and can be considered mental shortcuts that reduce the effort required by pure reason. When there is insufficient data or time to make a reasoned decision, we rely on our heuristics, which may be described as instinct, a gut feeling, an educated guess, common sense, or one of many other names for these mental shortcuts.

When something is urgent, we tend to apply heuristics in our decision-making process. When something is important, we tend to relegate the decision-making to the more effortful reasoning process.

Tibor Besedeš and his colleagues experimentally demonstrated that as people age, they become more likely to use heuristics and to make suboptimal decisions. Some argue this is due to diminished mental acuity; however, the age factor disappears when choices are presented in a person’s second language. From this one could conclude that when someone has a greater set of experiences upon which to call or recognize similarities, they are more likely to rely on heuristics.

Fortunately for our survival, heuristics tend to work; however, they are subject to biases that can be recognized and neutralized through reasoning. Let us consider some of the biases and advantages that could have actuarial or business implications.

Probability Distortion

Experimental evidence has demonstrated that people, including statisticians, are poor intuitive statisticians. The possibility of an event tends to create the mental construct of a probability skewed toward 50%. We tend to intuitively overweight low probabilities and underweight high probabilities. Although the chance of winning the Powerball jackpot is 1 in 292,201,338, in the mind of someone buying a ticket the imagined odds are often closer to 1 in 200,000, because the person imagines the possibility of winning, and the mind has a difficult time intuitively grasping the concept of such minuscule odds. The minutely possible becomes intuitively more probable. Similarly, the chance of loss of a house by flooding may be 1 in 200, but in the homeowner’s mind the imagined odds are likely to be closer to 1 in 20 or 1 in 50. Conversely, high probabilities are rarely accepted intuitively. We are inclined to dismiss a 95% chance of failure as being overstated or an erroneous result. This has been seen in the form of actuaries citing professional judgment as justification for declining to adjust reserves when statistical testing demonstrates failure at a reasonably high confidence level; e.g., a 99% likelihood of a set of X-factors being inadequate.
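
One way to model this distortion is an inverse-S probability weighting function. The sketch below assumes the one-parameter form and the value γ = 0.61 that Tversky and Kahneman estimated for gains in later work; both are assumptions for illustration, not figures from the experiments described above.

```python
def weight(p, gamma=0.61):
    """Decision weight attached to a stated probability p (inverse-S shape)."""
    numerator = p ** gamma
    return numerator / (numerator + (1 - p) ** gamma) ** (1 / gamma)

for p in (1 / 200, 0.05, 0.50, 0.95):
    print(f"stated {p:.3f} -> felt {weight(p):.3f}")
```

Under these assumptions a stated 1-in-200 chance receives a weight between 1 in 50 and 1 in 20, while a stated 95% chance receives a weight well below 95%.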

Another statistical distortion is the expectation of variance from the mean. When people were asked about the most likely distribution of the number of heads produced from five people flipping a fair coin six times each, they chose the combination of 3-2-4-3-3 as being more likely than 3-3-3-3-3. Yet all five people getting exactly three heads is over 77% more likely than the first option. We tend to expect some randomness, so we do not expect the expected to happen repeatedly.
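
The 77% figure can be reproduced from the binomial distribution. The sketch assumes each count is tied to a specific person, so 3-2-4-3-3 means the second person gets two heads and the third gets four.

```python
from math import comb

def prob_heads(k, flips=6):
    """Probability of exactly k heads in `flips` tosses of a fair coin."""
    return comb(flips, k) / 2 ** flips

def prob_sequence(counts):
    """Probability that independent flippers produce these head counts, in order."""
    prob = 1.0
    for k in counts:
        prob *= prob_heads(k)
    return prob

uniform = prob_sequence([3, 3, 3, 3, 3])
varied = prob_sequence([3, 2, 4, 3, 3])
ratio = uniform / varied  # (20/15)**2 = 16/9

print(f"{ratio - 1:.1%} more likely")
```

Three heads can occur in 20 ways out of 64, while two or four heads can each occur in only 15, so swapping two of the threes for a two and a four scales the probability by (15/20)², and the ratio is 16/9.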

Another way probability distortion comes into play is that humans tend to intuitively make large assumptions based on small sample sizes. From an intuitive perspective, this allows us to quickly adjust to current circumstances, which is good for survival in a rapidly changing environment; however, the bias can lead to poor long-term decisions. This has been evidenced by the studied behavior of retail investors in the stock market.

Immediacy

Our intuition tends to place more emphasis on what is urgent and leaves the determination of what is important to reason. Intuition’s focus on immediacy is a good survival mechanism. It makes rational sense to focus on one’s immediate well-being in most circumstances; however, being able to recognize what benefits one’s long-term well-being requires reason. The use of reason to defer gratification that is intuitively urgent is known to be one of the best indicators of future success. From a business perspective, the concern of a company becoming bankrupt in the next quarter would pose a significantly higher concern than of it being bankrupt in five years, which is a greater concern than it being bankrupt in 100 years. For publicly traded companies, quarterly earnings reports often produce disproportionately large moves in the company’s stock price.

From an actuarial or economic perspective, intuition applies a much higher discount rate to future outcomes than reason does, but relying on it is also riskier.
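
The discount rate implied by an intuitive choice can be backed out of a cash-out offer. The following is a rough sketch with hypothetical figures: ten annual payments of $10,000, a reasoned valuation rate of 4%, and a lump-sum offer of $50,000.

```python
def present_value(payment, years, rate):
    """Value today of level year-end payments discounted at an annual rate."""
    return sum(payment / (1 + rate) ** t for t in range(1, years + 1))

def implied_rate(lump_sum, payment, years, lo=0.0, hi=1.0, tol=1e-9):
    """Bisection for the annual rate at which the payments are worth lump_sum."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if present_value(payment, years, mid) > lump_sum:
            lo = mid  # payments still worth more than the offer: rate is higher
        else:
            hi = mid
    return (lo + hi) / 2

reasoned_value = present_value(10_000, 10, 0.04)  # hypothetical valuation rate
accepted_rate = implied_rate(50_000, 10_000, 10)  # rate implied by taking the offer

print(f"value at 4%: {reasoned_value:,.0f}; implied rate: {accepted_rate:.1%}")
```

In this example, accepting the offer is equivalent to discounting the payments at a little over 15% a year, several times the assumed reasoned rate.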

Intuition and Professional Judgment

During the Gulf War, a blip on a ship’s radar screen indicated something was traveling toward the fleet. Due to technical problems, the object’s altitude could not be determined. Pilots often turned off their aircraft’s identifier and radios during sorties and sometimes failed to re-establish them when returning to their carrier. Whatever was coming toward the fleet was traveling at about 700 miles per hour, which could be a friendly aircraft or a Silkworm missile. The commander had less than one minute to decide whether to fire Sea Dart missiles at the object. If he made the wrong decision, it would be the end of his naval career. He ordered the ship to “take it down.” He nervously waited in his quarters afterward to learn whether he had shot down an allied aircraft or saved a ship and the lives of the sailors on board. He was greatly relieved to learn the object had been a Silkworm missile. He could not explain how he made the decision and credited a gut feeling. Later analysis of the recording of the radar showed that due to the low altitude of the missile, it did not show up on the radar screen as soon as a higher-flying aircraft would have. The commander had seen so many aircraft on radar flying to the fleet that he could only recognize that something just did not seem right. His training had developed his intuition to lead him to the correct decision.

Sometimes we master tasks that initially require significant mental effort, such as learning how to drive an automobile or snow ski. As we become more proficient at these tasks, they are moved from reasoning activities to intuitive activities. We often refer to this as becoming second nature or developed reflexes. Similarly, when someone works in a specialty, their proficiency becomes intuitive. This is seen with chess masters, medical doctors, professional athletes, and actuaries. Experience in reasoning to analyze similar situations can lead us to intuitively sense what a result should be for a particular issue. This intuition is the recognition of past similarities and differences; moreover, it is something that cannot be fully described in language, so professional judgment is used to capture what is meant through reason or based on intuition rooted in extensive experience in the area of expertise. When intuition is used, however, we should be mindful of potential biases.

Anchoring and Theory Attachment

We tend to rely more on the first information we receive when making a decision. When asked to rate employees based on promptness, attention to detail, loyalty, and productivity, the initial trait rated tended to impact the ratings of the other traits. When we first obtain information, our intuition begins to form a theory, and anchoring can evolve into theory attachment.

Here are two examples of anchoring:

- People were asked to write down the last two digits of their Social Security numbers and then asked to consider that two-digit number as the price in dollars for items such as chocolate, wine, or computer equipment. They then entered an auction; those with the highest two-digit numbers submitted bids many times higher than those with the lowest numbers.

- Teachers were given bundles of student essays to grade and a fictional list of the students’ previous grades. The average of the previous grades affected the grades the teachers awarded for each student’s essay.

We intuitively resist believing we are wrong. When invested in a theory, we attempt to fit the evidence available to support the theory. This is referred to as “confirmation bias.” The more strongly we are invested in a belief, the more effort we put into fitting evidence to that belief, even to the point that contradictory evidence can increase the fervor with which a belief is held. A classic example of this was the resistance to Louis Pasteur’s attempts to disprove the commonly held theory of spontaneous generation. People believed living organisms came from nonliving matter; dirty rags and grain left in a dark room were believed to be the source of rats instead of rats coming from other rats. Because of his willingness to challenge a well-accepted theory, Pasteur is now quite famous for pasteurization in the United States and for his work with wine-producing yeast in France.

There is huge social, professional, and legal pressure to remain attached to accepted theories. Alexander Pope suggested prudence by stating, “Be not the first by whom the new is tried, nor the last to put the old aside.” It takes the courage of Galileo to question and oppose popular beliefs, but it is exactly this type of courage, questioning, and opposition that advance philosophy, arts, and the sciences. Unfortunately, as Galileo could attest, being correct is not always the most immediately rewarding approach.

Comparison

Despite Richard Dawkins’ reasoned argument for the selfish gene, altruism does exist and even benefits the larger gene pool. Humans intuitively feel empathy, sympathy, and compassion for other humans. As Charles Darwin noted, this helps keep diversity in the gene pool, which ultimately benefits the species. This desire for altruism has been cited as a possible reason a member of a fraternal society may be willing to pay higher premiums to the fraternal insurer than they might pay another company for a similar product.

In addition to altruism, humans have an intuitive desire for equal benefits when compared to others. This benefit fairness drive is primitive and has been seen in other mammals and even in some species of birds. Researchers rewarded two capuchin monkeys with cucumber slices for completing a task. The monkeys were quite content. However, when one of the monkeys was rewarded with grapes, the monkey being rewarded with cucumber slices began to protest by shaking his cage, slapping the floor and throwing the cucumber slices at the researcher. Actuaries often include competitor crediting rates in their models of dynamic lapse rates. This is an example of how fairness or comparison can be incorporated into actuarial modeling.
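
A dynamic lapse assumption of this kind might be sketched as follows. The functional form, parameter names, and default values are hypothetical and chosen only for illustration; actual models vary by company and product.

```python
def dynamic_lapse_rate(base_lapse, credited_rate, competitor_rate,
                       sensitivity=2.0, threshold=0.005, cap=0.40):
    """Annual lapse rate that rises once a competitor's rate exceeds the
    credited rate by more than `threshold` (all parameters hypothetical)."""
    excess = max(0.0, competitor_rate - credited_rate - threshold)
    return min(cap, base_lapse + sensitivity * excess)

# Competitor matches our 3% credited rate: only base lapses occur
print(dynamic_lapse_rate(0.05, 0.03, 0.03))

# Competitor credits 5%: the comparison pushes lapses above the base rate
print(dynamic_lapse_rate(0.05, 0.03, 0.05))
```

The threshold reflects the idea that small differences go unnoticed or are not worth acting on, and the cap keeps the assumption bounded when the gap is extreme.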

Focus

There is a famous experiment in which a short film is shown. There are two teams passing basketballs between themselves, one in white uniforms and one in black uniforms. The audience is told to silently count the number of times the team in white uniforms passes the basketballs and to ignore the team in the black uniforms. As the film proceeds, someone in a gorilla costume walks into the middle of the screen, beats their chest and exits. Due to the focus on the team in white and the effort to ignore the team in black, about half of the audience did not notice the gorilla and did not even believe they could have missed it. When the film is watched without concentration (or perhaps, distraction), the gorilla appearance is obvious. The experiment shows that we not only can be blind to the obvious, we can also be blind to our own blindness.

Representativeness

Consider the following question posed by Kahneman and Tversky, with Steve being a randomly selected individual from a representative sample:

Steve is very shy and withdrawn. He is very helpful but shows little interest in people or in the world in general. He is meek and tidy with a strong need for order and structure. He also has a passion for detail. Is Steve more likely a librarian or a farmer?

Most people immediately identify Steve as being a librarian, because he fits the mental image of a librarian. However, it rarely occurs to people that there are many more male farmers than male librarians and there are more male farmers who would meet Steve’s description than male librarians, so statistically Steve is more likely to be a farmer.
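
The base-rate argument is an application of Bayes’ rule. In the sketch below, the 20-to-1 ratio of male farmers to male librarians and the two likelihoods are illustrative assumptions, not measured values.

```python
def posterior(prior_odds_a_to_b, likelihood_a, likelihood_b):
    """P(A | evidence) when A and B are the only candidates."""
    weight_a = prior_odds_a_to_b * likelihood_a
    weight_b = likelihood_b
    return weight_a / (weight_a + weight_b)

# Assumed: 1 librarian per 20 farmers; 40% of librarians and
# 5% of farmers fit Steve's description
p_librarian = posterior(1 / 20, 0.40, 0.05)
print(f"P(librarian | description) = {p_librarian:.2f}")
```

Even when the description is assumed to be eight times more typical of librarians, the larger population of farmers leaves Steve more likely to be a farmer.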

Fido barks and likes to chase cars. Is it more likely that Fido is a cat, a dog, or an entity in the universe?

The name and actions of Fido lead one to imagine a dog, so the intuitive answer is that Fido is a dog; but every dog is an entity in the universe, so Fido is more likely to be an entity in the universe than to be a dog.

Our tendency is to associate an object (entity or situation) with a similar representation, which tends to bring bias to our concept of the object—and is part of the reason humans, including statisticians, are poor intuitive statisticians.

Framing

The conceptualization of an idea and the personal traits of a decision-maker create a frame for a decision. When patients are told a treatment has a 90% chance of success, they are more likely to opt for the treatment than if they are told the treatment has a 10% chance of failure. Individuals are more likely to make timely payments if there is a penalty for late payment than if there is a discount for early payment, although the dollar amounts are the same. Framing is one of the largest biases in decision-making. The framing effect has been observed to increase with age, but curiously enough, it also tends to disappear when the choice is presented in a person’s second language.

While some have suggested framing increases with age due to reduced reasoning ability, the second language exception appears to discredit that well-held theory. An alternative hypothesis is that framing increases with age due to the increased experience of the subject.

Conclusions

While simple actuarial algorithms have often been shown to be at least as effective as trained technicians as diagnostic tools, the role of intuition and professional judgment will remain a major influence in actuarial practice. Understanding the potential intuitive biases from a cognitive science perspective can help us recognize and correct for the biases and improve our use of professional judgment.

To advance the actuarial profession in step with other fields, actuarial science will need to more fully integrate behavioral economics in its modeling and predictive analytics. Increasing our understanding of human heuristics, biases, and loss aversion will help us provide better products and more accurate predictive models as we integrate cognitive science into product development, marketing, financial modeling, and regulation within the insurance industry.

Finally, as a profession, we will need to apply our existing ethical guidelines to the applications of behavioral science, because ethics without business is broke, and business without ethics is bankrupt.

Randall A. Stevenson, MAAA, ASA, is a senior vice president and consulting actuary with Hause Actuarial Solutions in Overland Park, Kansas.