By R. Greg Szrama III

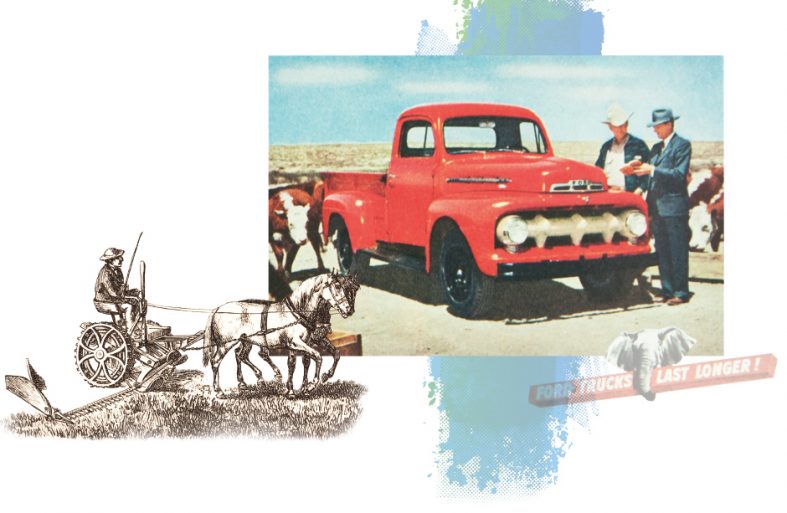

The world around us changes quickly. We’ve all seen those booklets that highlight how different the world felt when we were born: the cost of bread, a movie ticket, a car or a house, or the tuition at prominent universities. One change that often goes under the radar is the drastic shift in how we do our jobs as technology transforms our work patterns.

The advent of the internal combustion engine provides a vivid example of how technology alters the shape of our workforce. Consider its impact on the agricultural industry alone. About 40% of all labor at the turn of the 20th century was farm-related; today approximately 2% of American labor is farm-related. Back then, farmers planted 90 million acres of corn for a yield of 2.7 billion bushels. Modern farms plant 85 million acres of corn for a yield of around 14 billion bushels.[1] The adoption of tractors and other mechanized agricultural equipment spurred much of this change.

Along with unbelievable gains in productivity, we’ve also witnessed a massive change in the leading causes of farming accidents. The tractors and other machinery that revolutionized crop production are now the leading cause of death among agricultural workers, according to the Centers for Disease Control and Prevention (CDC).[2]

This shift affects on-the-job safety requirements, the nature of the injuries workers suffer, and the sources of risk inherent in owning and operating a farm.

Just as we can point to vivid examples of technological progress in the past, we can expect continued technological progress to have similarly vivid effects on present and future work environments. Modern computing advances, powered in large part by artificial intelligence and cloud computing, promise to disrupt any number of fields and, eventually, the entire labor force. Examples of these advances include robotic process automation, virtual reality, and computer vision. Per the World Economic Forum, accounting only for advances in artificial intelligence, we are on pace to displace up to 75 million workers by 2022 while creating around 133 million new roles.[3] This disruption makes it critical for us to understand what our work environments will look like as the global workforce adapts to these new roles.

Robotic Process Automation

First let’s consider Robotic Process Automation (RPA). The name is somewhat misleading, as the only “robots” involved are software constructs built to mimic the work an employee normally performs. Put simply, RPA is the application of artificial intelligence software to automating routine tasks. In a sense, these software robots, or bots, do for the back office what manufacturing robots have already done for the factory floor. Artificial intelligence allows these bots to perform complex tasks like voice and facial recognition and natural language processing (the technology that allows Alexa to respond to our every whim). By using artificial intelligence to learn how a user with little or no programming ability performs a task, RPA tools can create complex, custom-tailored bots for that specific task, which can then be shared with others in similar roles.

Despite fears to the contrary, firms adopting RPA technologies tend to deploy them to augment, rather than replace, their existing workforce.[4] Workers typically train the bots to automate their own routine tasks using the same software (such as web browsers and desktop applications) that the workers themselves use. This type of automation pays huge dividends for businesses that are accumulating data faster than they can develop the ability to process it and derive value from it. Knowledge workers gain the freedom to focus on identifying trends and analyzing aggregate impacts while their personally trained bots perform routine tasks like moving data from one system to another or formatting reports. As recently explored in Contingencies,[5] many insurance companies are seeing great success with early adoption of RPA. As that article explains, insurers’ mix of legacy systems, complex workflows, and focus on customer experience makes RPA an especially rewarding investment.

The most immediate impact of deploying RPA is a reduction in human error. Just as factory automation reduces or eliminates human errors caused by fatigue or repetition, RPA bots play to a computer’s strength: predictably repeating a task. Fewer errors also mean less rework, such as the rework that follows when we inevitably misapply a filter in a spreadsheet, or when we mix up two numbers while jotting down a note and then re-enter the wrong figure in a different software program. While not a cure-all for mistakes, RPA can be a significant tool in applying the Poka-yoke (mistake-proofing) concept from Lean Manufacturing to our office environments.
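To make the data-moving and mistake-proofing ideas concrete, here is a minimal Python sketch of the kind of routine transfer an RPA bot might take over. It is not how any particular RPA product works: commercial tools typically drive the same user interfaces a worker uses, whereas this sketch reads a hypothetical CSV export directly. The field names (`policy_id`, `premium`, `effective_date`), the ID format, and the functions `validate` and `transfer` are illustrative assumptions. The poka-yoke element is that suspect records are routed to a person instead of being copied silently.

```python
import csv
import datetime
import io

# Hypothetical export from a source system; a string stands in for a file.
SOURCE_EXPORT = """policy_id,premium,effective_date
P-1001,1250.00,2019-01-15
P-1002,980.50,2019-02-01
P-10O3,1100.00,2019-02-30
"""


def validate(row):
    """Mistake-proofing checks: return a list of problems with one record."""
    problems = []
    policy_id = row["policy_id"]
    # Expect "P-" followed only by digits (an assumed ID format).
    if not (policy_id.startswith("P-") and policy_id[2:].isdigit()):
        problems.append(f"malformed policy_id {policy_id!r}")
    try:
        if float(row["premium"]) <= 0:
            problems.append("premium must be positive")
    except ValueError:
        problems.append(f"non-numeric premium {row['premium']!r}")
    try:
        datetime.date.fromisoformat(row["effective_date"])
    except ValueError:
        problems.append(f"invalid date {row['effective_date']!r}")
    return problems


def transfer(source_text):
    """Reshape valid records for the target system; hold back the rest."""
    accepted, rejected = [], []
    for row in csv.DictReader(io.StringIO(source_text)):
        problems = validate(row)
        if problems:
            # Route to a human instead of copying a suspect record.
            rejected.append((row["policy_id"], problems))
        else:
            accepted.append({
                "id": row["policy_id"],
                # Store money as integer cents in the (assumed) target format.
                "premium_cents": round(float(row["premium"]) * 100),
                "effective": row["effective_date"],
            })
    return accepted, rejected


if __name__ == "__main__":
    accepted, rejected = transfer(SOURCE_EXPORT)
    print(f"copied {len(accepted)} records")
    for policy_id, problems in rejected:
        print(f"needs review: {policy_id}: {'; '.join(problems)}")
```

Here the bot copies two records and holds back `P-10O3`, whose ID contains a letter O in place of a zero and whose date (February 30) does not exist: exactly the kind of slip a tired person re-keying data might pass along.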

Another major benefit of RPA comes in handling routine regulatory and compliance matters.[6] Financial firms in particular face extensive regulatory reporting requirements. Compliance with these requirements is staff-intensive, requiring large teams that rely on a great number of manual processes. In the future it is entirely possible that regulatory teams will apply RPA to fully automate their reporting processes. As RPA matures and incorporates more advanced artificial intelligence, bots may be applied to more complex activities such as researching whether work products meet regulatory standards. Such a use case will allow compliance groups to move more quickly through their assessments and to reduce the time to market for nearly any product imaginable.

Virtual Reality and Virtual Presence

Now let’s consider virtual reality and the concept of virtual presence it powers. The current applications of virtual reality skew heavily toward simulation and training. One classic (and pioneering) example is the U.S. military’s investment in cockpit trainers. Through the 1980s and ’90s, these simulators developed into fully electronic systems with enclosed canopies, projected screens, hydraulic motion actuators, and haptic feedback. Medical training provides another critical example of virtual reality usage, allowing simulations to supplement classic methods like cadaver dissection and operating room observation. Some surgical teams also use virtual medical simulations to prepare for complex surgeries, modeling the patient’s unique physiology from CAT scans and other medical imaging so they can plan the operation more accurately before physically treating the patient.[7]

Historically, the large upfront investments required in both computing resources and physical space kept virtual reality from wide professional use. Companies like Oculus and HTC are reducing or eliminating these barriers with increasingly affordable consumer-grade headsets. Google Cardboard allows nearly anyone with an Android smartphone to experience virtual reality simulations. Advances in cameras and image processing now allow inexpensive and rapid creation of photorealistic virtual scenes.[8] Modern graphics processors from Nvidia and AMD can render these scenes accurately and at the high frame rates required to reduce the incidence of virtual reality sickness in end users.
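The demand those frame rates place on graphics hardware can be made concrete with simple arithmetic. The sketch below assumes a headset refreshing at 90 Hz with a combined 2160 × 1200 display, figures matching the first consumer Oculus Rift and HTC Vive; treat them as illustrative inputs rather than requirements, and the function names as hypothetical.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to render one complete frame, in milliseconds."""
    return 1000.0 / refresh_hz


def pixels_per_second(width: int, height: int, refresh_hz: int) -> int:
    """Pixels the GPU must fill each second for a given display mode."""
    return width * height * refresh_hz


if __name__ == "__main__":
    for hz in (30, 60, 90):
        print(f"{hz:>3} Hz: {frame_budget_ms(hz):5.1f} ms per frame")

    # Assumed headset: 2160 x 1200 combined across both eyes, at 90 Hz.
    print(f"{pixels_per_second(2160, 1200, 90):,} pixels per second")
```

At 90 Hz, each frame (covering both eyes) must be finished in about 11 ms, versus roughly 33 ms for 30 Hz video, and the assumed headset needs 233,280,000 pixels drawn every second.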

With the hardware hurdles largely solved, vendors are racing to find the right applications for virtual reality technology. The most likely candidates right now involve the concept of telepresence.[9] Stated simply, telepresence allows participation in events or control of machinery from a remote location. This is not a new concept—commercial interest in telepresence traces back to the TeleSuite platform in the early ’90s. Virtual presence is the application of virtual reality and related technologies to the same category of problems telepresence attempts to address.

The ultimate goal of virtual presence is to bring simulation participants into an environment where they forget they’re dealing with computer-generated representations of the other participants. Historical telepresence systems have been held back by latency issues or the plain awkwardness of interacting with them. Certain hospitals, for example, are piloting telepresence robots that consist of a video screen atop a machine resembling a vague cross between a garbage can and a Roomba. In one notable instance, a physician delivered a terminal diagnosis to a patient via such a robot, to the horror of the patient and his daughter, who was also present.[10] While this case illustrates a continued need for a human touch in delicate situations, virtual presence will provide for interaction on a more human level with people around the globe.

The immersive nature of virtual reality will help address this awkward interfacing between human and robot. New hardware systems such as Amazon’s augmented reality glasses will allow virtual reality simulations to overlay the real world. Instead of speaking with a robot, both participants will see true-to-life representations of the person they’re speaking with. Businesses already use two-dimensional video teleconferencing systems like Cisco’s Spark to allow for a sense of being in the same room despite physical separation. Applying virtual reality to these systems will grant users a sense of physical presence and allow for more natural interactions.

The real game-changer in terms of work environments, however, is in remote operation of robots and robotic vehicles. Currently fielded telepresence systems allow control of robots through monitors and control schemes including the use of keyboards and joysticks or video game controllers. While this works well enough for maneuvering drones and wheeled or tracked robots, these control schemes fall short when it comes to perceiving environmental conditions and controlling complex robotic limbs.

Researchers at Brown University have seen great success applying common off-the-shelf virtual reality components to controlling robots remotely.[11] This application of virtual reality enables a level of situational awareness that’s simply not possible with two-dimensional interfaces. The Brown University experiment showed that benefits of virtual environments like improved depth perception, use of peripheral vision, and the freedom of simply turning your head to look around made the tasks intuitively simple for participants—tasks that would otherwise take intense training and practice to master through traditional control schemes.
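One intuitive piece of this, looking around by simply turning your head, can be sketched as a mapping from headset orientation to a robot’s pan-tilt camera. This is a simplified illustration and not a description of the Brown University system; the class names, angle limits, and smoothing factor below are hypothetical, and the sketch assumes the headset reports yaw and pitch in degrees.

```python
from dataclasses import dataclass


@dataclass
class PanTiltLimits:
    """Mechanical range of a hypothetical pan-tilt camera head, in degrees."""
    pan_min: float = -170.0
    pan_max: float = 170.0
    tilt_min: float = -45.0
    tilt_max: float = 60.0


def clamp(value, low, high):
    return max(low, min(high, value))


class HeadToCamera:
    """Turn headset yaw/pitch into pan/tilt commands for a robot's camera."""

    def __init__(self, limits, smoothing=0.3):
        if not 0.0 < smoothing <= 1.0:
            raise ValueError("smoothing must be in (0, 1]")
        self.limits = limits
        self.smoothing = smoothing  # 1.0 follows the head exactly
        self.pan = 0.0
        self.tilt = 0.0

    def update(self, head_yaw_deg, head_pitch_deg):
        # Never command the camera past its mechanical stops.
        target_pan = clamp(head_yaw_deg, self.limits.pan_min, self.limits.pan_max)
        target_tilt = clamp(head_pitch_deg, self.limits.tilt_min, self.limits.tilt_max)
        # Exponential smoothing damps jitter from small head movements.
        self.pan += self.smoothing * (target_pan - self.pan)
        self.tilt += self.smoothing * (target_tilt - self.tilt)
        return self.pan, self.tilt


if __name__ == "__main__":
    camera = HeadToCamera(PanTiltLimits(), smoothing=0.5)
    for yaw, pitch in [(40.0, 10.0), (40.0, 10.0), (175.0, -80.0)]:
        print(camera.update(yaw, pitch))
```

The smoothing factor is a trade-off: lower values hide sensor jitter but make the view lag behind the operator’s head, and in virtual reality that lag can itself cause discomfort.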

The freedom and ease of access of virtual reality will allow skilled workers to remain in safe environments while still feeling present and effective in their duties. This has applications both in increasing the range of environments in which companies can safely operate and in improving the general safety of maintenance and repair operations in realms like boiler maintenance and agricultural machinery. Evolutions in this technology will also increase the number of tasks that can be performed remotely, expanding telework opportunities far beyond the white-collar office workers who are the main current beneficiaries of these arrangements. Advanced robots coupled with virtual reality control mechanisms will allow preventive maintenance specialists, for example, to work remotely, further increasing the safety of complex workplaces like factories and mills and greatly improving the workers’ quality of life as well.

Computer Vision

Computer vision technologies, like virtual reality, have been around for quite some time but have seen tremendous progress in recent years. The goal of computer vision is to allow a computer to interpret an image or sequence of images in order to understand the physical world around it. As far back as the 1960s these systems could perform basic edge and shape detection. Building on that foundation, modern computer vision systems can identify unique animals or persons, distinguish between different facial expressions, identify body language, and estimate motion of objects in video sequences.
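Basic edge detection can be illustrated with the Sobel operator, a classic edge detector chosen here purely as an example; the text does not say which methods early systems used. The minimal pure-Python sketch below slides two 3 × 3 kernels over a grayscale image stored as a list of rows and reports the gradient magnitude, which is large wherever brightness changes sharply. The function name is illustrative.

```python
import math

# Sobel kernels: horizontal (GX) and vertical (GY) brightness gradients.
GX = [[-1, 0, 1],
      [-2, 0, 2],
      [-1, 0, 1]]
GY = [[-1, -2, -1],
      [0, 0, 0],
      [1, 2, 1]]


def sobel_magnitude(image):
    """Return gradient magnitude for a grayscale image (list of equal-length rows).

    Border pixels are left at 0 because the 3x3 window does not fit there.
    """
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    pixel = image[r + i - 1][c + j - 1]
                    gx += GX[i][j] * pixel
                    gy += GY[i][j] * pixel
            out[r][c] = math.hypot(gx, gy)
    return out


if __name__ == "__main__":
    # Dark left half, bright right half: a single vertical edge.
    image = [[0, 0, 10, 10, 10] for _ in range(5)]
    for row in sobel_magnitude(image):
        print(row)
```

For this test image, each interior row comes out as `[0.0, 40.0, 40.0, 0.0, 0.0]`: the two nonzero columns mark where brightness jumps from 0 to 10, and flat regions produce zero.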

Arriving at this state has required complex artificial intelligence models.[12] To take a basic example, a human observer can readily identify the differences among 10 different chairs, noting distinctions like seat height, the presence or absence of arms, and whether they are traditional dining chairs or swiveling desk chairs. A computer must first be trained even to recognize that an object is a chair; once the computer knows it’s seeing a chair, it must also be trained to identify the chair’s functions. There’s no practical way to directly program a computer to differentiate, for example, between an oversized loveseat and a small couch, much less to tell intuitively from the worn seats of grandma’s dining room chairs which one she prefers.
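The difference between writing rules and training from examples can be sketched with a toy nearest-neighbor classifier. Real computer vision systems learn from raw pixels, typically with neural networks; this sketch instead assumes three hand-measured features (seat height in meters, whether the chair has arms, whether it swivels) and a handful of made-up labeled examples, purely to show a label coming from examples rather than from hand-written rules.

```python
import math
from collections import Counter

# Hypothetical labeled examples: (seat height in m, has arms, swivels) -> label.
TRAINING_EXAMPLES = [
    ((0.46, 0, 0), "dining chair"),
    ((0.45, 1, 0), "dining chair"),
    ((0.47, 0, 0), "dining chair"),
    ((0.50, 1, 1), "desk chair"),
    ((0.48, 0, 1), "desk chair"),
    ((0.52, 1, 1), "desk chair"),
]


def classify(features, k=3):
    """Label a chair by majority vote among its k nearest training examples."""
    nearest = sorted(
        TRAINING_EXAMPLES,
        key=lambda example: math.dist(features, example[0]),
    )[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    print(classify((0.49, 1, 1)))
    print(classify((0.44, 0, 0)))
```

No line of code says “a swiveling chair is a desk chair”; the answer comes entirely from the examples, which is why the quantity and variety of training data matter so much. How features are scaled (meters here, so seat height barely moves the distance) is itself a design choice that changes the results.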

Workplace applications of computer vision fall into a few broad categories including identifying people and events, automated inspection and organization, and environmental navigation. These all focus on processing visual stimuli that we humans process without thought, but in a way that can be automated and predictably applied on demand. Where these applications will have the biggest impact is in the way they intersect with other technologies, such as the robotic process automation and virtual reality technologies we’ve already examined.

Coupling computer vision with robotic process automation allows for more complex modelling of work processes. One notable example in practice is in processing visual information in reports where different authors adopt different color schemes, gradients, or layouts to present similar information.[13] In the future, more complex scenarios like species identification or even monitoring the operation of other robots through video feeds will be open for automation.

It’s in the area of autonomous vehicles, however, that computer vision holds some of its greatest potential. In 2017, 40% of all workplace fatalities were transportation-related.[14] In 2016, over 30% of workplace injuries were in the transportation segment.[15] These figures account only for worker injuries and fatalities; in 2017, crashes involving large trucks killed 683 truck occupants and another 3,419 people, including passenger vehicle occupants and pedestrians.[16] The transportation industry also suffers serious financial impact from ordinary traffic congestion. Per the Federal Highway Administration, the economic costs of congestion add up to over $6.5 billion annually.[17] These costs cascade across all areas of logistics and enterprise-scale resource planning.

Autonomous vehicles, enabled by computer vision and advanced artificial intelligence processing, will do a great deal to address these costs. With human fatigue removed from consideration, transportation operators will be able to schedule deliveries to avoid congestion while also greatly reducing the insurance costs of transporting goods. In the near term, this may take the form of assisted driving, in which an autopilot supplements a human driver, just as we keep human pilots in airplane cockpits despite highly advanced autopilot systems. However, there’s no strict need for the human driver to be physically present in the driver’s seat. With virtual reality systems, a human driver might supervise from a safe remote location and potentially even direct multiple vehicles simultaneously. In the future, we might see trained operators leading convoys of automated trucks from any location, increasing the reliability of logistics networks while also alleviating a now-chronic shortage of professional drivers and operators.

The Future

Many of the innovations promised by robotic process automation, virtual reality, and computer vision are admittedly in their infancy. At present, RPA is probably the most widely used of these technologies in industry, yet even RPA remains confined to specialized situations and is nowhere near universal adoption. Looking back at the personal computer, however, it took about 15 years to reach the point where businesses had to have them, and that point was largely driven by the desire for email, a relatively mundane use case that we now find essential.

Once these technologies do mature and reach wide adoption, we can foresee shifts in industry at least as large as those powered by the internal combustion engine. The changes will start small with increased worker productivity and safety. From there, we’ll see reduced liabilities across the board, not just in transportation but in any occupation involving travel or dangerous workplaces.

Along with these changes, we must carefully watch for and analyze the unintended consequences of adoption. Virtual reality systems, for example, hold incredible potential as communications systems. Research into how the human brain reacts to virtual environments, however, is still in its infancy. Psychologists have already seen that the brain may be tricked into a state where the simulation becomes reality to the participant.[18] As the fidelity of virtual simulations advances, there’s no telling what physiological and psychological consequences might arise from extended use of these systems in a work environment.

Even so, just as the internal combustion engine transformed the dangers inherent to agricultural work, technology will continue to surprise us. We can look forward to increased profitability, reliability, safety, and, most importantly, more life-saving advances. As we get a handle on the risks we can look forward to an amazing future with more opportunity and increasingly personalized work environments.

GREG SZRAMA III is a software engineering manager at Accenture, where he focuses on high-throughput data processing applications. He is also a Google certified professional cloud architect.

References

[1] “2017 Letter to Berkshire Shareholders”; Berkshire Hathaway; Berkshire Hathaway website; February 2016.

[2] “Agricultural Safety”; U.S. Centers for Disease Control; The National Institute for Occupational Safety and Health (NIOSH) website; April 12, 2018.

[3] “Machines Will Do More Tasks Than Humans by 2025 but Robot Revolution Will Still Create 58 Million Net New Jobs in Next Five Years”; World Economic Forum; WEF website; September 17, 2018.

[4] “What Knowledge Workers Stand to Gain from Automation”; Harvard Business Review; June 19, 2015.

[5] “Robots Join the Team—Automation, transformation, and the future of actuarial work”; Contingencies; Jan/Feb 2018.

[6] “Automation of regulatory reporting in banking and securities”; Deloitte; Deloitte website; 2017.

[7] “Using VR to Simulate a Patient’s Exact Anatomy”; Stanford Health Care; Stanford Health Care website; Accessed October 15, 2019.

[8] “Rapid creation of photorealistic virtual reality content with consumer depth cameras”; IEEE; IEEE Xplore website; April 6, 2017.

[9] “The Trillion Dollar 3D Telepresence Gold Mine”; Forbes; November 20, 2017.

[10] “A ‘robot’ doctor told a patient he was dying. It might not be the last time.”; Vox; March 13, 2019.

[11] “Software enables robots to be controlled in virtual reality”; Brown University; Brown website; December 14, 2017.

[12] “Computer Vision in Artificial Intelligence”; Oracle; Oracle Data Science Blog; January 10, 2019.

[13] “KIIDs Recognition: a real case of computer vision application in RPA”; Information & Data Manager; IDM website; March 9, 2018.

[14] “National Census of Fatal Occupational Injuries in 2017”; U.S. Department of Labor; Bureau of Labor Statistics website; December 18, 2018.

[15] “Nonfatal occupational injuries and illnesses resulting in days away from work in 2016”; U.S. Department of Labor; Bureau of Labor Statistics website; November 15, 2017.

[16] “Fatality Facts 2017, Large trucks”; Insurance Institute for Highway Safety, Highway Loss Data Institute; IIHS website; December 2018.

[17] “The Economic Costs of Freight Transportation”; U.S. Department of Transportation; Federal Highway Administration website; February 1, 2017.

[18] “Model of Illusions and Virtual Reality”; Frontiers in Psychology; National Institutes of Health website; June 30, 2017.

The Human Consequences of Automation

The technologies discussed here all hold the potential of deep human impacts, both positive and negative. I had the opportunity to talk with Justin, who has personally experienced the disruption brought about by automation. He experienced downsizing when his job in telecommunications network engineering was automated by software. Now he employs the expertise he gained in administering and planning these networks to deploy artificial intelligence-powered self-organizing network capabilities that provide a greater level of automation in these networks. Justin also works with autonomous vehicles, applying open-source software to give autonomous capabilities to late-model cars using the cameras and other sensors the automakers install in these vehicles for their own safety systems.

One question I had for Justin was whether automation carries any hidden human costs. Having been laid off when his job was automated, he quickly responded, “When you love your job, you’re really passionate about it.” He went on to explain how a passionate, motivated worker will look for his own efficiencies and his own ways of helping others do the work better. Automating jobs “removes the human element, and the human ability to pull together multiple disciplines to do the job better.” An automated system can only do what it’s trained to do and lacks the systemic knowledge a human has about their work.

We talked about his present work, where he deploys the same types of automation systems that cost him a job he cared about. He admitted to being deeply conflicted, knowing that people would lose their jobs even as he was successful at his. Justin went on to say, however, that companies chasing automation to stem financial losses risk winning the battle but losing the war. He feels that, in the long term, “Companies that succeed with automation will be those that value human effort and how automation supplements it. Companies that devalue their employees will see increasing losses as they lose their own ability to innovate in a complex marketplace.”

I asked Justin if there was anything else he’d like people to know about the benefits and risks of automation. He pointed to the classic Twilight Zone episode “The Brain Center at Whipple’s,” in which a factory owner replaces his entire workforce with robots, is tortured and driven to insanity by malfunctioning machines, and is finally replaced by a robot himself. Even so, he believes that ultimately the benefits of technologies like RPA and autonomous vehicles far outweigh the costs. Companies deploying these technologies properly will increase public safety and create a more dynamic, more exciting workplace. The risk lies in seeing employees as inconveniences rather than assets. Every worker replaced with automation costs a business institutional expertise and the potential for further innovation. The businesses that weather the upheaval caused by automation will be those that most successfully empower their people to be their best rather than replacing them wholesale.

—RGS

Robotics Control Systems

Robotic platforms can explore and manipulate environments that are too dangerous for a human to operate in. Bomb disposal robots, for example, are costly platforms, but losing a robot in an explosion is far preferable to a disposal technician losing a life. The most basic example is aptly named the Wheelbarrow; the earliest version was built from a literal wheelbarrow fitted with a device that disrupts the circuitry of explosive ordnance to disable it.

Controlling the movement of these robots is comparatively straightforward. Wheeled robots move forward, backward, and turn, just like our cars. Some can turn in place, especially if they’re on tracks instead of wheels. Where control schemes get challenging is when manufacturers give these robotic platforms the ability to interact with their environment.

Modern iterations of the Wheelbarrow robot, for example, incorporate a robotic arm that can wield many different attachments to facilitate bomb disposal. Unfortunately, robotic arms add a great deal of complexity for the operator. A basic arm may have only a handful of joints, but such arms are constrained in the orientations from which they can grasp or interact with objects. To replicate the utility of a human arm, which can interact with any object within reach from any angle, a robotic arm needs six actuators: enough to place its gripper anywhere within reach and to point it in any direction. Think about your own arm: it can rotate at the shoulder, move forward or backward and up or down, bend at the elbow, and tilt and twist at the wrist.
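The count of six follows from what it takes to describe where a gripper is and how it is pointed: three numbers for position and three for orientation, each of which a general-purpose arm must be able to set independently. A planar arm shows the same idea in two dimensions, where a pose takes only three numbers (x, y, and heading), so three joints suffice. The sketch below computes forward kinematics (joint angles in, gripper pose out) for such an arm; the function name, link lengths, and angles are illustrative assumptions.

```python
import math


def planar_forward_kinematics(link_lengths, joint_angles):
    """Return (x, y, heading) of the gripper for a planar arm.

    Each joint angle (radians) is measured relative to the previous link,
    so the gripper's heading is the running sum of the joint angles.
    """
    if len(link_lengths) != len(joint_angles):
        raise ValueError("need one joint angle per link")
    x = y = heading = 0.0
    for length, angle in zip(link_lengths, joint_angles):
        heading += angle
        x += length * math.cos(heading)
        y += length * math.sin(heading)
    return x, y, heading


if __name__ == "__main__":
    links = [1.0, 1.0, 0.5]  # illustrative link lengths
    print(planar_forward_kinematics(links, [0.0, 0.0, 0.0]))  # arm straight out
    print(planar_forward_kinematics(links, [0.0, math.pi / 2, -math.pi / 2]))
```

With only two links, reaching a given point leaves at most two choices (elbow up or elbow down), and each fixes the gripper’s heading; that is the orientation constraint described above. The third joint frees the heading in the plane, and in three dimensions the same reasoning calls for six joints.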

Control schemes for these arms often include banks of sliders or even video game controllers in an attempt to give the arm a more natural feel. Software assistance is required to gain precise control and manipulation of objects in the environment. Operators are also limited in the number of robotic parts they can manipulate at once; controlling the orientation and movement of the robot is often performed independently from controlling its other capabilities. The most complex systems require multiple operators to use the platform’s full capabilities. Finally, because the robot’s cameras are the operator’s only view of its environment, judging the depth and scale of objects is difficult, which further complicates control of the platform.
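As one example of the kind of software assistance involved, control software commonly shapes raw joystick input before it reaches a joint. The sketch below applies a deadband (ignoring small, unintended stick movements), an exponential curve (fine control near center, full speed at the edge), and a speed cap. The function name and default values are hypothetical and are not taken from any bomb disposal platform.

```python
import math


def shape_axis(raw, deadband=0.1, expo=3.0, max_speed=0.2):
    """Convert a joystick axis reading in [-1, 1] into a joint speed command.

    max_speed is in whatever units the (hypothetical) arm controller expects.
    """
    raw = max(-1.0, min(1.0, raw))  # guard against out-of-range readings
    if abs(raw) < deadband:
        return 0.0  # ignore drift and small unintended movements
    # Rescale so output rises smoothly from 0 just outside the deadband.
    scaled = (abs(raw) - deadband) / (1.0 - deadband)
    # Raising to the expo power (3 by default) keeps small deflections gentle.
    return math.copysign(scaled ** expo * max_speed, raw)


if __name__ == "__main__":
    for reading in (0.05, 0.3, 0.55, 1.0, -1.0):
        print(f"{reading:+.2f} -> {shape_axis(reading):+.4f}")
```

With these defaults, pushing the stick halfway past the deadband yields only one-eighth of full speed, which lets an operator creep a gripper toward a delicate object while still reaching full speed when needed.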

—RGS