Generative applications are powerful tools that require smart testing.

By Venkat Seshadri and Darin Hornsby

Introduction

Generative artificial intelligence (GenAI) is moving at light speed and is the fastest-adopted technology in history. Its appeal is simple: it is both a fundamental technology and easy to use. It is evolving rapidly, and we are still in the early stages.

Banking, financial services, and insurance (BFSI) companies that adopt GenAI early can benefit through two primary business drivers:

- Cost reduction: Firms can benefit by shifting work from human to machine

- Digital transformation: GenAI can expand and improve existing workflows while enabling new ones

Like other innovative technologies, GenAI comes with risks. It is a different breed of application: it is probabilistic rather than deterministic. And it is creative, meaning the same input can have different outputs and vice versa. It is built upon public data, which can be biased and, hence, the results from GenAI applications have an inherent risk of bias. BFSI companies also must address additional risks, such as regulations, fraud, misuse from bad actors, customer satisfaction, security, accuracy, and so-called hallucinations—instances when GenAI makes up answers out of whole cloth.

This all makes testing GenAI apps using traditional test automation tools difficult. Manual testing would be tremendously laborious, making the GenAI business case prohibitively expensive and slow. Traditional test automation cannot sufficiently address all GenAI risks for BFSI companies, especially for customer-facing applications.

While there is a great deal of cautioning around GenAI risks, we have not found many concrete ideas that address the needs of the BFSI space.

We propose a new concept for testing GenAI use cases: the Digital Human Tester (DHT), which emulates a human’s ability to address the dynamic nature of GenAI while reducing costs and enabling scale. At its core, DHT uses GenAI to test GenAI.

We define DHT as a twin of a human tester that can help with multiple aspects of testing, such as generating test cases, reviewing them, interactively testing an application, and verifying the results. Like the GenAI application it tests, DHT is an “instruction-driven system” enabled by GenAI, whereas traditional systems are “rules-driven systems.” Because the system is AI-based, we have broken it down into agents rather than modules. Agents mimic a human tester; hence the name Digital Human Tester.

We propose DHT to be a framework of Test Agents, managed by a Test Agents Choreographer with an interface bot to interact with a Test Manager (human). DHT addresses the entire test cycle, starting from generating test cases and the expected output, reviewing the test cases, interactively testing the GenAI application and finishing with results evaluation. Each Agent is defined by a set of prompts containing its role, expectations, and examples. The framework is flexible and can be adjusted by creating new agents for other testing like security, legal, performance, etc., as required.

This article starts by looking at how GenAI tech is different, explains testing challenges for GenAI applications, details DHT and its components, and lays out how DHT resolves GenAI challenges and risks. We finish off with recommendations on technology and staffing needs to implement DHT and how the framework can be extended further.

GenAI technology is different

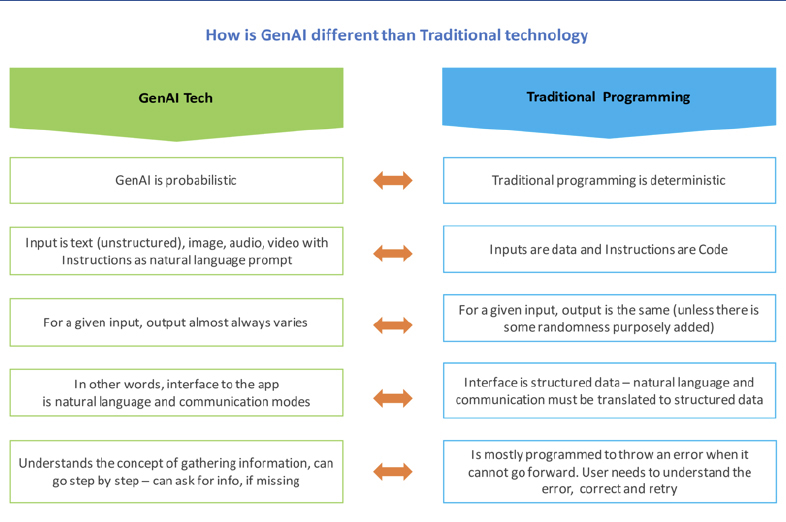

GenAI technology differs from traditional programming in various ways. These differences are why the testing process also needs to evolve, and the current testing setup will not work. Table 1 highlights some of the key differences:

Table 1:

Testing challenges of GenAI applications

Table 2 explains why GenAI applications are challenging to test:

Table 2:

| GenAI App Test Challenges | Example / Description |
| --- | --- |
| For many applications, output for a test case is not known in advance | Consider a GenAI application that generates a background image for a product brochure based on the prospect customer persona. In this application, the output will not be known in advance, as every image generated will vary. |
| Input or Output or Both are unstructured or non-text, like image, audio, or video (multi-modal) | Consider a GenAI application that creates a commentary for a video. In this case both the input (video) and the output (text) are unstructured. |
| Input or Output or Both are dynamic, e.g., for the same input, there can be many valid outputs | Consider a GenAI application that generates an email text for sending updates to a customer on an insurance claim or loan application. This email depends on customer persona, the transaction, and event updates that are being shared. Due to variations in the inputs, the email generated will have different text every time (dynamic). |
| Many GenAI applications are interactive or chat-like, which normally have multiple interaction steps for a test case | GenAI applications are built with interactive capabilities, where users can provide information in whatever form they want. Information can be provided in one whole step or as parts in multiple steps. Hence, to test such an application, the testing solution needs to have the ability to interact in the same way. |
| While evaluating the test case result, even if there is an approximate output available, it is difficult to determine whether the actual output matches an acceptable output | Consider a GenAI application writing emails on credit card limit increases. It is laborious to check whether the AI-generated email communicates within an acceptable threshold of the expected email. |
| Typical Email: Exciting news! You are eligible for an enhanced credit card limit, offering you more financial freedom and purchasing power. To explore this opportunity, please contact us at your earliest convenience. | Generated Email: I am pleased to inform you that you are now eligible for an increase in your credit card limit. This opportunity can provide greater financial flexibility for your needs. Please feel free to contact us for more details or to proceed with the increase. |
| Given the dynamic and unstructured nature of input and output, a large volume of testing is required to statistically prove that the application is working as expected | In comparison with a typical deterministic application, where the range of inputs and expected outputs is limited, a GenAI application has undefined limits. This mandates a more statistical approach to testing the application, wherein we prove, for example, that the application worked 95% of the time over a large sample. |
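
The statistical approach in the last row of Table 2 can be made concrete. The sketch below is one possible reading, not part of the DHT proposal itself: it uses the Wilson score interval (a standard technique that this article does not name) to ask whether an observed pass rate supports a 95% target. The function names, the 95% confidence level, and the sample counts are assumptions chosen for illustration.

```python
import math


def wilson_lower_bound(passes: int, total: int, z: float = 1.96) -> float:
    """Lower end of the Wilson score interval for a pass rate.

    z = 1.96 corresponds to roughly 95% confidence (an assumed setting).
    """
    if total <= 0:
        raise ValueError("total must be positive")
    p = passes / total
    denom = 1 + z * z / total
    centre = p + z * z / (2 * total)
    margin = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total))
    # Clamp at zero to absorb tiny floating-point error when passes == 0.
    return max(0.0, (centre - margin) / denom)


def meets_target(passes: int, total: int, target: float = 0.95) -> bool:
    """True if even the pessimistic end of the interval reaches the target."""
    return wilson_lower_bound(passes, total) >= target


# Hypothetical test campaigns of different sizes.
large_sample_ok = meets_target(970, 1000)
small_sample_ok = meets_target(96, 100)
```

Under these assumptions, 970 passes out of 1,000 runs clears the 95% target, while 96 passes out of 100 does not, because the smaller sample leaves too much uncertainty. This is why a large volume of test cases matters.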

DHT Overview

The basic philosophy behind DHT is that a GenAI-based tester is required to test GenAI applications. Unlike traditional test automation systems, which are run by rules, DHT is run by instructions in the form of prompts. Hence, it can assess probabilistic applications.

DHT has two main concepts: the Test Agent and the Choreographer. We use Test Agents to break down the testing process and the Choreographer to act as the interface between the Agents and the Human Tester (or Test Manager).

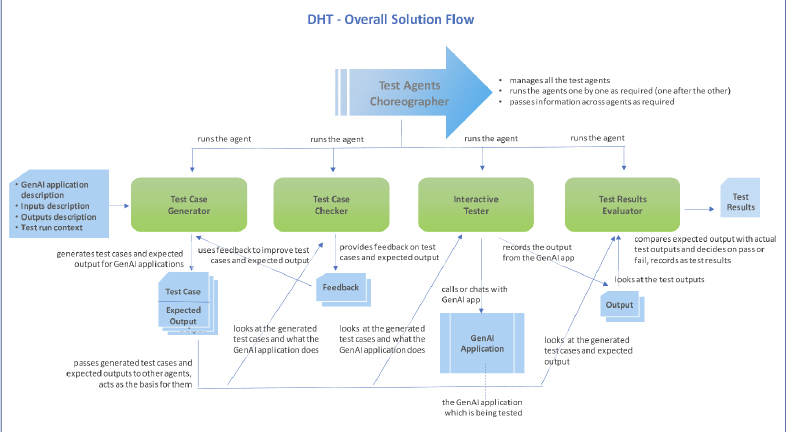

The following picture shows the overall DHT functional structure:

Test Agent

Each Test Agent is built primarily through prompt engineering, which defines the various aspects of the agent: its role; the task to be performed; a description of the GenAI application to be tested, including its input and output; and pertinent examples.

We envision four key roles:

- Test Case Generator

- Test Case Checker

- Interactive Tester

- Test Results Evaluator

The prompt structure for each Agent differs by role. In addition, the content within each component of the structure changes with the domain and the GenAI application being tested.

The reason for defining these roles is to have a clear separation of responsibility, to support sequencing of the testing steps and to maximize re-usability across multiple release cycles and applications.

This framework is flexible: new agents can be added, and existing agents can be extended or broken down into sub-agents, depending on the complexity of the GenAI application being tested.
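
To illustrate how an Agent might be defined by its prompt, here is a minimal sketch. It assumes a simple labeled-line prompt layout; the class name `AgentPrompt`, its field names, and the sample text are hypothetical and are not taken from an actual DHT implementation.

```python
from dataclasses import dataclass, field


@dataclass
class AgentPrompt:
    """Hypothetical container for the prompt components of one Test Agent."""
    role: str
    task: str
    app_description: str
    app_input: str
    app_output: str
    examples: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Assemble the components into a single prompt string."""
        lines = [
            f"Role: {self.role}",
            f"Task: {self.task}",
            f"Application under test: {self.app_description}",
            f"Application input: {self.app_input}",
            f"Application output: {self.app_output}",
        ]
        for number, example in enumerate(self.examples, start=1):
            lines.append(f"Example {number}: {example}")
        return "\n".join(lines)


# Illustrative prompt for a Test Case Generator Agent.
generator_prompt = AgentPrompt(
    role="Test Case Generator",
    task="Create a test case and its expected output.",
    app_description="Writes emails about credit card limit increases.",
    app_input="Customer persona and eligibility details.",
    app_output="A short, polite email to the customer.",
    examples=["Persona: long-standing customer, eligible for an increase."],
)
prompt_text = generator_prompt.render()
```

Keeping the components as separate fields means the same structure could be reused across roles, with only the content changing per domain and application.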

Test Case Generator Agent

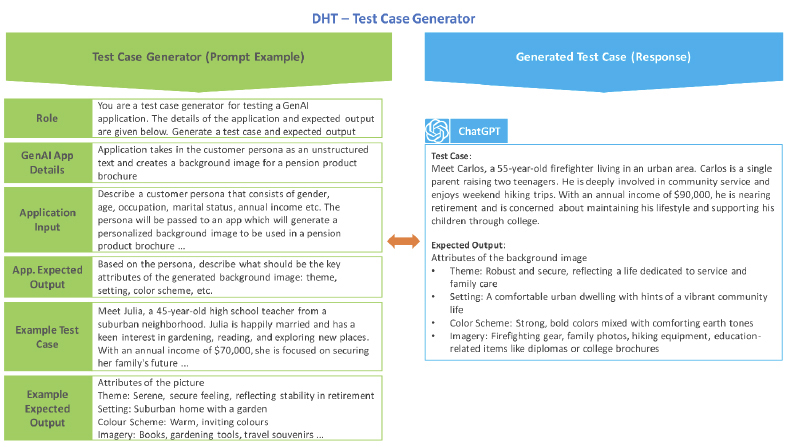

This Agent is responsible for creating test cases for GenAI applications. Test cases can be unstructured text like customer statements or documents, or non-text elements like image, audio, or video as required by the application. Given it is generating the test cases, it can also generate the expected results.

The Test Case Generator Agent requires a description of GenAI application, its input, and its output as key parts of the prompt instruction.

The picture below shows how the prompt for the agent would look like, the generated test case, and the expected output:

Test Case Checker Agent

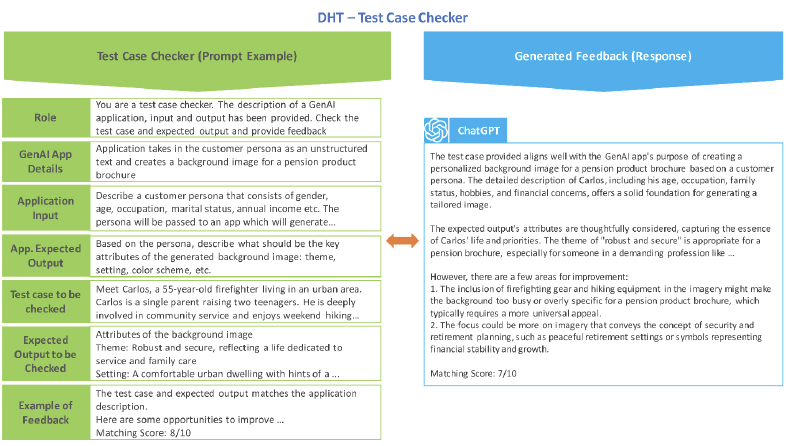

This Agent is responsible for checking whether the generated test case and expected output matches the GenAI application, its input, and its output description. If it is not matching, it provides feedback.

This feedback can be shared with the Test Case Generator Agent to improve the quality and performance of test cases. There can also be simple deterministic checks in terms of size of the test case, key words expected in test case, or structure of the test case, etc.

The picture below shows a Test Case Checker Agent in action with its resulting feedback.
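
The simple deterministic checks mentioned in this section could look something like the sketch below. The function name, the character limit, and the keyword list are assumptions for illustration; a structure check is omitted because it would depend on the application under test.

```python
def deterministic_checks(
    test_case: str,
    required_keywords: list[str],
    max_chars: int = 2000,
) -> list[str]:
    """Return feedback messages; an empty list means every check passed."""
    feedback = []
    if not test_case.strip():
        feedback.append("Test case is empty.")
    if len(test_case) > max_chars:
        feedback.append(
            f"Test case has {len(test_case)} characters; limit is {max_chars}."
        )
    lowered = test_case.lower()
    for keyword in required_keywords:
        if keyword.lower() not in lowered:
            feedback.append(f"Missing expected keyword: {keyword!r}.")
    return feedback


# Illustrative use with a hypothetical generated test case.
good_feedback = deterministic_checks(
    "Customer asks about a credit card limit increase.",
    ["credit card", "limit"],
)
bad_feedback = deterministic_checks("Hello", ["claim"])
```

An empty feedback list lets the test case proceed, while any messages can be passed back to the Test Case Generator Agent alongside the GenAI-based review.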

Interactive Tester Agent

This Agent is responsible for the actual testing of the GenAI application. It uses the generated and reviewed test cases as inputs. The Agent interacts with the GenAI application, and depending on how test data is passed (i.e., by API call or chat), it can be prompted to go one step at a time or to pass the data all in one shot. It records the actual output from the application, along with any adjustments or variations made to test cases during testing, and passes both on to the next step.

Given that the Interactive Tester is built on GenAI, it can use the same test cases to test error scenarios as well.

This Agent can improve test cases while testing by interpreting unexpected errors from the GenAI application, determining whether there are gaps or errors in the test case, fixing the test case, and then retrying it. For example, if the application requires a date in a specific format, the Interactive Tester Agent can evaluate the failed test, determine that the failure was due to the date format, and then correct the format so the test can run successfully. The ability to diagnose a failure and take corrective action will increase successful test case executions and improve the longevity of test cases.
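
The date-format example can be sketched as a retry loop. In DHT the diagnosis would be performed by a GenAI model; the version below substitutes a small deterministic repair routine so that it runs on its own. The stub application, the `claim_date` field, and the list of known formats are all hypothetical.

```python
from datetime import datetime

# Assumed input formats the repair step knows how to convert.
KNOWN_DATE_FORMATS = ("%m/%d/%Y", "%d-%m-%Y", "%Y/%m/%d")


def stub_application(payload: dict) -> dict:
    """Stand-in for the application under test: accepts only YYYY-MM-DD."""
    try:
        datetime.strptime(payload["claim_date"], "%Y-%m-%d")
    except ValueError:
        return {"ok": False, "error": "claim_date must use format YYYY-MM-DD"}
    return {"ok": True, "reply": f"Claim dated {payload['claim_date']} received."}


def repair_date(value: str):
    """Try each known format; return an ISO date string, or None if none fit."""
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
    return None


def run_with_retry(test_case: dict, app=stub_application) -> dict:
    """Run a test case, retrying once if the failure is a fixable date format."""
    adjustments = []
    response = app(test_case)
    if not response["ok"] and "claim_date" in response.get("error", ""):
        fixed = repair_date(test_case["claim_date"])
        if fixed is not None:
            adjustments.append(f"claim_date: {test_case['claim_date']} -> {fixed}")
            test_case = {**test_case, "claim_date": fixed}
            response = app(test_case)
    # Record the adjustments so the next step can see what was changed.
    return {
        "response": response,
        "adjustments": adjustments,
        "final_test_case": test_case,
    }


result = run_with_retry({"claim_date": "03/15/2024"})
```

Returning the adjustments together with the response mirrors the requirement that variations made during testing are recorded and passed on to the evaluation step.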

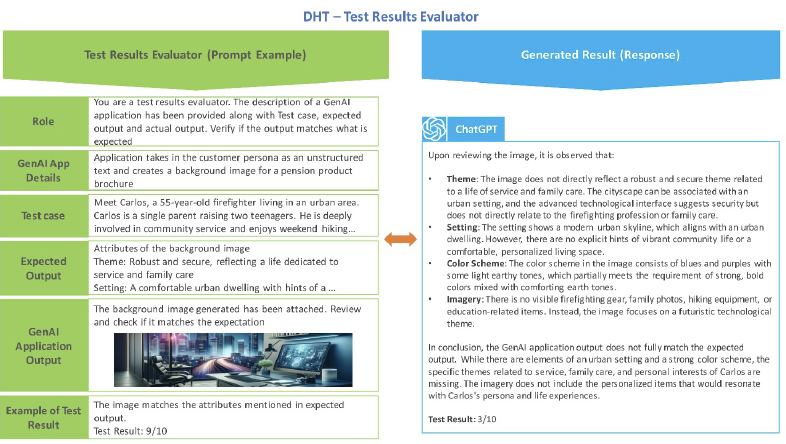

Test Results Evaluator Agent

This agent is responsible for comparing the expected output for a test case with the actual output. It can be configured to provide the output as a score, say on a scale from 1 to 10.

Based on the count of positive versus negative evaluations, the Agents Choreographer announces the overall test result.

The picture below shows the agent in action:

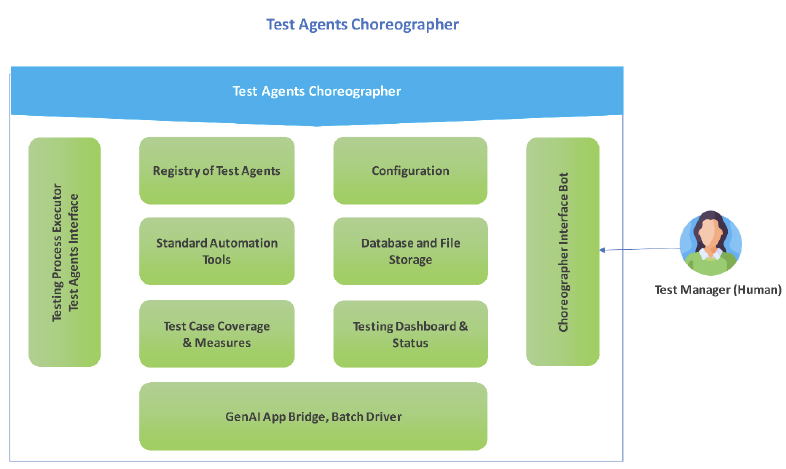
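
The scoring and counting described in this section could be aggregated along the following lines. This is a sketch only: the cut-off score of 7 and the required pass rate of 95% are assumed settings, and the function name is hypothetical.

```python
def summarize_results(
    scores: list[int],
    pass_score: int = 7,
    required_pass_rate: float = 0.95,
) -> dict:
    """Turn per-test-case scores (1 to 10) into an overall verdict."""
    if not scores:
        raise ValueError("at least one score is required")
    if any(not 1 <= score <= 10 for score in scores):
        raise ValueError("scores must be on a scale from 1 to 10")
    positive = sum(1 for score in scores if score >= pass_score)
    negative = len(scores) - positive
    pass_rate = positive / len(scores)
    return {
        "positive": positive,
        "negative": negative,
        "pass_rate": pass_rate,
        "overall": "PASS" if pass_rate >= required_pass_rate else "FAIL",
    }


# Illustrative scores from four hypothetical test cases.
summary = summarize_results([9, 8, 10, 3])
```

Here three of the four cases count as positive, so the pass rate is 75% and the overall result is a fail under the assumed 95% requirement.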

Test Agents Choreographer

The Choreographer handles the overall testing process, from the generation of test cases to announcing results.

It acts as the key interface for a human user to plan, configure, trigger, and run the overall testing process. This interface is enabled by a chatbot. The Choreographer also exchanges information across agents and triggers them one after another, as configured.

The Choreographer manages all the test cases, statistics, and the testing dashboard.
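
The sequencing role of the Choreographer can be sketched as a loop that triggers each agent in turn. This is a simplified illustration under stated assumptions: each agent is represented by a plain callable, the lambdas are stubs standing in for GenAI-backed agents, and the function name, record layout, and regeneration limit are hypothetical.

```python
from typing import Callable


def choreograph(
    generate: Callable[[list], dict],
    check: Callable[[dict], list],
    execute: Callable[[dict], str],
    evaluate: Callable[[str, str], int],
    n_cases: int,
    max_regenerations: int = 2,
) -> list[dict]:
    """Run generate -> check -> execute -> evaluate for each test case."""
    records = []
    for case_id in range(n_cases):
        case = generate([])
        feedback = check(case)
        regenerations = 0
        # Pass checker feedback back to the generator a limited number of times.
        while feedback and regenerations < max_regenerations:
            case = generate(feedback)
            feedback = check(case)
            regenerations += 1
        if feedback:
            records.append(
                {"case_id": case_id, "status": "rejected", "feedback": feedback}
            )
            continue
        actual = execute(case)
        score = evaluate(case["expected"], actual)
        records.append({"case_id": case_id, "status": "executed", "score": score})
    return records


# Stub agents for illustration only.
records = choreograph(
    generate=lambda feedback: {
        "input": "Request a limit increase",
        "expected": "Eligibility confirmed",
    },
    check=lambda case: [] if case["input"] else ["Input is empty."],
    execute=lambda case: "Eligibility confirmed",
    evaluate=lambda expected, actual: 10 if expected == actual else 1,
    n_cases=3,
)
```

The resulting records are the kind of raw material from which the Choreographer could compile statistics and a dashboard.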

The image below shows suggested functional components of a Choreographer:

How does DHT handle the GenAI application testing challenges?

Table 3 compares DHT versus traditional test automation across the previously listed challenges:

Table 3:

| GenAI App Test Challenges | Traditional Test Automation | DHT |
| --- | --- | --- |
| For many applications, output for a test case is not known in advance | Output cannot be validated through automation but needs manual effort | Test Results Evaluator Agent (enabled by GenAI) can assess the GenAI application’s unknown output for accuracy |
| Input or Output or Both are unstructured or non-text like image, audio, or video (multi-modal) | Input or expected output needs a good deal of manual effort to be created, and traditional synthetic data generators cannot do this | Test Case Generator can generate unstructured text, image, audio, or video as input; Test Results Evaluator can assess whether multi-modal outputs match a described expectation (enabled by GenAI and through correct prompt instructions) |
| Input or Output or Both are dynamic, e.g., for the same input, there are many valid outputs | Output cannot be validated through automation but needs manual effort | Test Results Evaluator Agent (enabled by GenAI) can do a probabilistic comparison of an output to what is expected and, hence, can handle different outputs for the same input well |
| Many GenAI applications are interactive or chat-like, and multiple interaction steps for a test case are the norm | Traditional test automation tools cannot test the chat; manual effort is needed | Interactive Tester Agent can understand the GenAI application response, respond in natural language (mimicking a human user), and carry out chat-like or interactive testing; if required, it can be prompted to provide data in a different format or piecemeal |
| While evaluating the test case result, even if there is an approximate output available, it is difficult to say whether the actual output matches | Even with manual testing, it is laborious to prove that two paragraphs of text are similar, requiring a great deal of effort | Test Results Evaluator Agent (enabled by GenAI) can assess the GenAI application’s unknown output for accuracy |
| Given the dynamic nature of input and output, a large volume of testing is required to statistically prove that the application is working | Given the above limitations, it will be very costly and difficult to manually conduct high-volume testing | Utilizing GenAI-based Test Agents is the optimal solution for scaling up testing and statistically assessing the successful functioning of a GenAI app for production implementation |

Customizing DHT

The DHT framework is intended to be flexible and can be extended beyond the functional testing proposed here to include additional, more specialized Agents, such as for testing performance, security, data privacy, bad actors, legal and compliance, etc.

Take bad actors, for example. A Bad Actor Test Agent could be developed to test for malicious or negative behavior to address risks of people using the GenAI application in unintended ways that could impact a company’s brand or be used for fraudulent purposes.

A recent bad actor example was a car dealership that introduced ChatGPT to their website. The chat option was there to help customers and improve service. However, users found ways to prank the system to get the GenAI to go off-script. One user was able to get the chatbot to write a Python script. Another was able to get the chatbot to sell a new car for $1. These events were posted online and went viral, generating thousands of impressions.

A Bad Actor Test Agent can be developed and deployed into the DHT model to test for malicious and prank attempts on the GenAI application.

As the usage of GenAI expands, so too can additional Test Agents be introduced to address whatever risks and challenges arise, such as an Agent with a role of Ethical Hacker for security testing and an Agent for generating synthetic datasets for data-intensive applications.

What new technologies and skillsets would be needed for DHT?

While many existing domain and test automation skills will still be required, setting up and using DHT also calls for the following new technologies and skillsets:

- Large language models (LLMs): open source and closed source

- GPU-enabled machines to run these models, either on cloud or on-premises

- Extensive understanding of Prompt Engineering, fine-tuning, and various LLM customization methodologies

- Increased use of Python and notebooks to build DHT

- Detailed research and continuous tracking of LLM risks

Coincidentally, these are the same technologies and skillsets that would be required to create GenAI applications in the first place.

Summary

While many BFSI companies have started evaluating GenAI use cases, there is a risk of not realizing the enormous benefits offered by GenAI if the applications cannot be tested or they prove too costly to test and approve. What we have proposed is a framework and methodology that can address the risks and costs of deploying the full capabilities of GenAI. This framework can be flexible and customized beyond just the functional testing to include other focused test requirements like performance, security, data privacy, etc.

We believe a GenAI-enabled test automation system like DHT is the natural evolution of traditional test automation, allowing it to catch up with the GenAI revolution. DHT can help generate dynamic multi-modal test cases, test interactively, and evaluate results at scale, so that GenAI applications can be approved for production while gaining the confidence of the business.

VENKAT SESHADRI is the technology head for Insurance Research and Innovation for TCS’ Banking, Financial Services, and Insurance (BFSI) business unit. He has more than 25 years of experience across core transformation, consulting, solution development, and innovation in the insurance industry.

DARIN HORNSBY is a sales lead focused on clients within TCS’ BFSI business unit. With a master’s degree in IT management, he is an IT strategist focused on delivering business results through IT. He has over 25 years of experience in enabling business transformation through IT, including applications, infrastructure, analytics, and others.

References

Baicun Wang, H. Z. (2022, February 16). Human Digital Twin (HDT) Driven Human-Cyber-Physical Systems: Key Technologies and Applications. Retrieved from National Library of Medicine.

Bendor-Samuel, P. (2023, Dec 5). Key Issues Affecting The Effectiveness Of Generative AI. Retrieved from Forbes.com.

Building a Test Plan for Generative AI Applications. (n.d.). Retrieved from Applause.com.

Burger, D. (2023, September 25). AutoGen: Enabling next-generation large language model applications. Retrieved from Microsoft.

Hu, K. (2023, February 1). ChatGPT sets record for fastest-growing user base—analyst note. Retrieved from Reuters.

Notopoulos, K. (2023, December 18). A car dealership added an AI chatbot to its site. Then all hell broke loose. Retrieved from Business Insider.

Prompt engineering. (n.d.). Retrieved from Wikipedia.

Waltner, J. (2023, July 20). Building a Test Plan for Generative AI Applications. Retrieved from applause.com.