By Srivathsan Karanai Margan

Insurance companies would be wise to monitor this imminent tech.

Quantum computing is one of the most anticipated technologies today. Built on the principles of quantum physics, quantum computers are expected to solve certain problems that are too complex for classical computers, in some cases exponentially faster. The superior processing power of quantum computing is expected to affect a variety of industries. The insurance industry relies on processing risk data for actuarial prediction and risk management, so it is especially well placed to benefit.

This article covers the basics of quantum computing and how it could both benefit and challenge insurers. It also takes a measured view of the hype and outlines what insurers can do to prepare for its impact.

The basics of quantum computing

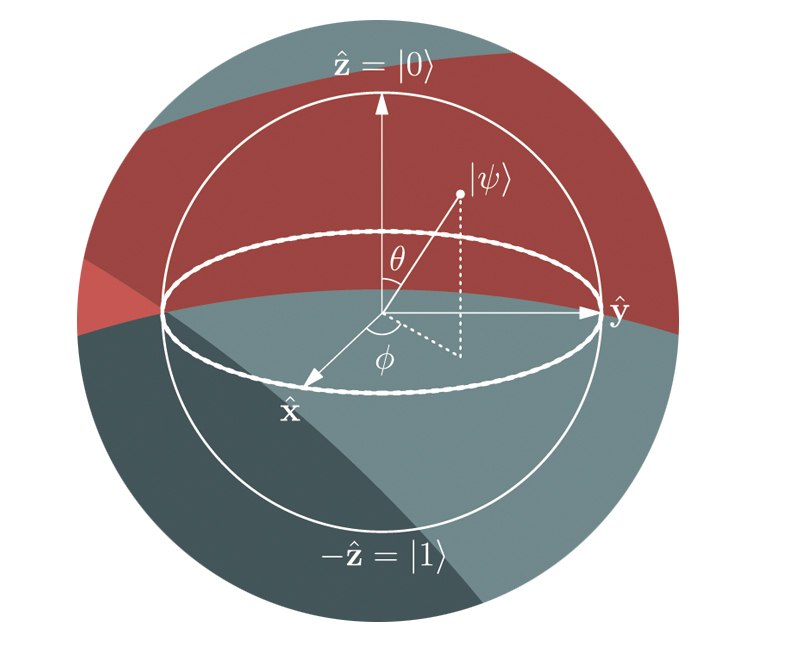

Quantum computers are built differently from the conventional “classical” computers that we are all familiar with. A classical computer is governed by the laws of classical physics and is made up of electronic components such as transistors and capacitors. Quantum computers, on the other hand, lie at the intersection of physics, computer science, and mathematics and harness the laws of quantum physics. The basic unit of information in a classical computer is the “bit,” which always has a definite value of 0 or 1. In contrast, the unit of information in a quantum computer is the “quantum bit,” or “qubit,” which can exist in a combination of 0 and 1 at the same time. Classical bits are deterministic, whereas qubits are inherently probabilistic: measuring a qubit yields 0 or 1 with probabilities determined by its state.

Researchers are currently pursuing several approaches to building quantum computers, using different physical systems such as superconducting circuits, trapped ions, and photons. Superposition and entanglement are two important properties of qubits that allow quantum computers to solve certain problems in far less time than a classical computer. Superposition is the ability of a qubit to exist in a weighted combination of 0 and 1 until it is measured. A register of n qubits can therefore be in a superposition of all 2^n possible bit patterns at once. This is often described as letting quantum computers perform many calculations in parallel. However, a useful answer can only be read out through algorithms that make the correct outcomes more likely when the qubits are measured. Entanglement is a property that links qubits so that they behave as a single system. Measuring one qubit gives results that are correlated with the others, even if they are physically separated. These correlations cannot be used to send information faster than light, but they are essential to many quantum algorithms.
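The sketch below makes superposition and entanglement more concrete by simulating two qubits in ordinary Python. It uses the textbook state-vector model rather than any vendor's SDK, and all function names are illustrative. A Hadamard gate puts the first qubit into superposition. A CNOT gate then entangles it with the second qubit, producing a so-called Bell state. Each individual measurement is random, yet the two qubits always agree (00 or 11).

```python
import math
import random

# Two-qubit state vector: amplitudes of |00>, |01>, |10>, |11>
# (index = 2 * q0 + q1, so qubit 0 is the left-hand bit).
LABELS = ["00", "01", "10", "11"]

def hadamard_q0(state):
    """Hadamard on qubit 0: puts it into an equal superposition of 0 and 1."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot_q0_q1(state):
    """CNOT with qubit 0 as control: flips qubit 1 when qubit 0 is 1 (swaps |10> and |11>)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def probabilities(state):
    """Born rule: each outcome's probability is the squared magnitude of its amplitude."""
    return [abs(a) ** 2 for a in state]

def measure(state, shots, seed=7):
    """Sample measurement outcomes, as repeated runs on hardware would."""
    rng = random.Random(seed)
    outcomes = rng.choices(LABELS, weights=probabilities(state), k=shots)
    return {label: outcomes.count(label) for label in LABELS}

start = [1.0, 0.0, 0.0, 0.0]            # both qubits start in |0>
bell = cnot_q0_q1(hadamard_q0(start))   # (|00> + |11>) / sqrt(2)
print([round(p, 3) for p in probabilities(bell)])   # [0.5, 0.0, 0.0, 0.5]
print(measure(bell, shots=1000))                    # only "00" and "11" appear
```

A classical simulation of n qubits needs 2^n amplitudes. This is one reason large quantum systems quickly become impossible to emulate on classical hardware.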

Quantum supremacy and quantum advantage are two terms used when comparing the performance of quantum computers with that of classical computers. Quantum supremacy refers to the point at which a quantum computer can solve a problem, even one of no practical use, that conventional computers cannot solve in a feasible amount of time. Quantum advantage, on the other hand, refers to the point at which quantum computers deliver a significant performance advantage over classical computers on useful real-world tasks. Experts currently disagree on whether quantum supremacy has been achieved, but they broadly agree that quantum advantage is still some years away.

The faster processing capabilities of quantum computers can be applied to use cases across four categories.

- Quantum simulation: To perform in-silico simulations of complex challenges with a large number of variables at unprecedented speed

- Quantum optimization and search: To optimize complex systems to find the most efficient path and search through large data sets more efficiently

- Quantum machine learning: The use of quantum systems to sift through large volumes of structured and unstructured data at very high speeds, find correlations in the data, and extract insights

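- Quantum cryptography: The use of quantum mechanics to secure data against hacking or interception (for example, through quantum key distribution) and, conversely, the use of quantum algorithms to factor large integers rapidly, which threatens today’s public-key encryption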

These use cases have the power to revolutionize a wide range of industries and fields, such as drug discovery and design, materials discovery, aerospace design, polymer design, big data search, supply chain optimization, and finance. Quantum computing is expected to outperform classical computing most clearly on certain kinds of problems. These problems have many elements and variables, require evaluating a large number of possible interaction combinations, and have multiple possible outcomes. As the number of possible combinations grows, their complexity increases exponentially, making them intractable for classical computers.
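A little arithmetic makes this exponential growth concrete. The sketch assumes a problem made of n independent yes/no decisions. It compares an unstructured classical search with Grover's quantum search algorithm, a well-known example of the search category listed above. The function names are hypothetical.

```python
import math

def candidate_solutions(n_decisions):
    """n independent yes/no choices (e.g., include an asset or not) give 2**n candidates."""
    return 2 ** n_decisions

def classical_expected_checks(n_candidates):
    """Unstructured classical search checks about half the candidates on average."""
    return n_candidates / 2

def grover_iterations(n_candidates):
    """Grover's algorithm needs about (pi / 4) * sqrt(N) oracle calls to find one marked item."""
    return math.floor(math.pi / 4 * math.sqrt(n_candidates))

for n in (10, 20, 40, 60):
    N = candidate_solutions(n)
    print(f"n={n:>2}  candidates={N:.2e}  classical~{classical_expected_checks(N):.2e}"
          f"  grover~{grover_iterations(N):.2e}")
```

Grover's algorithm offers a quadratic, not exponential, speedup: at n = 60 it still needs roughly 10^9 iterations. Exponential speedups are known only for specific problems, such as factoring and certain quantum simulations. This is why matching each problem to the right hardware matters.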

The sweet spots in insurance

In insurance, quantum computing could find applications in both the risk and investment segments of the business. The risk space comprises activities such as risk profiling, modeling, portfolio optimization, assessment, prediction, pricing, and management. The investment space comprises activities such as portfolio allocation and optimization, trading optimization, hedging positions, asset price prediction, derivatives and options pricing, and optimized currency arbitrage. Activities in both segments have always been data-driven. At the core of risk management lies the insurer’s ability to build risk models that consider many probable risk scenarios and constraints. Insurers use these models to assess the present portfolio and extrapolate how it will behave in the future.

Insurers and other financial institutions mostly rely on the Monte Carlo method to analyze and predict the probability of different outcomes of complex probabilistic events using random variables. The method is used to understand the impact of risk and uncertainty in prediction and forecasting. Its use of randomness gives it a significant advantage over models that use fixed inputs. In each simulation run, the model assigns random values to the uncertain input variables and computes a result. The process is repeated many times with different random values, and the final estimate is obtained by averaging all the results. On classical computers, processing these iterations takes a long time, because the accuracy of the estimate improves only with the square root of the number of runs. The number of variables that can practically be included is also limited. The key advantage a quantum computer could bring is that, thanks to superposition, qubits can in effect represent many random scenarios simultaneously. Quantum algorithms such as quantum amplitude estimation can, in principle, reach the same accuracy with quadratically fewer samples, substantially reducing computation time.
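The Monte Carlo loop described above can be sketched in a few lines. The portfolio parameters are hypothetical: 500 policies, a 4% claim probability, and lognormal claim sizes. The model is deliberately simple so that the estimate can be checked against the exact expected loss. The standard error shrinks only with the square root of the number of trials, so halving the error takes four times as many runs. This is the cost that quantum amplitude estimation could, in principle, reduce quadratically.

```python
import math
import random

# Illustrative assumptions: 500 policies, 4% annual claim probability,
# lognormal claim sizes with mu=8.0 and sigma=1.0 (mean claim about 4,900).
N_POLICIES, CLAIM_PROB, MU, SIGMA = 500, 0.04, 8.0, 1.0

def simulate_annual_loss(rng):
    """One Monte Carlo trial: draw random claim occurrences and sizes, return total loss."""
    total = 0.0
    for _ in range(N_POLICIES):
        if rng.random() < CLAIM_PROB:                 # does this policy have a claim?
            total += rng.lognormvariate(MU, SIGMA)    # if so, how large is it?
    return total

def monte_carlo(n_trials, seed=42):
    """Average many random trials; return the estimate and its standard error."""
    rng = random.Random(seed)
    losses = [simulate_annual_loss(rng) for _ in range(n_trials)]
    mean = sum(losses) / n_trials
    variance = sum((x - mean) ** 2 for x in losses) / (n_trials - 1)
    return mean, math.sqrt(variance / n_trials)

exact = N_POLICIES * CLAIM_PROB * math.exp(MU + SIGMA ** 2 / 2)   # analytic check
for trials in (250, 1_000, 4_000):
    mean, std_error = monte_carlo(trials)
    print(f"trials={trials:>5}  estimate={mean:>9,.0f}  std error={std_error:>6,.0f}  exact={exact:,.0f}")
```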

The insurance industry is now at an inflection point. The traditional risk models that insurers perfected over several decades are being challenged by two powerful forces: the emergence of connected data and an increase in risk severity. The proliferation of connected devices generates a continuous deluge of risk-related data. This influx of information could immensely help insurers manage risk, but existing risk models have not yet been upgraded to incorporate such a continuous flow of data. In addition, insurers face headwinds from increasing risk severity and the unprecedented combinatorial complexity of risks such as climate, geopolitics, and cyber. Put simply, traditional risk models are becoming less effective, which creates an urgent need to update them to reflect new data and changing risk dynamics. Quantum computers could process large datasets to identify new risk patterns and optimize the risk management function much faster. Insurers could simulate the performance of their asset and risk portfolios and find the best possible allocation after considering the additional data and risk scenarios. As the complexity of financial and risk markets increases, massive computational power will let insurers consider more variables and perform such assessments more frequently.
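Portfolio optimization of the kind described above is often posed as a quadratic unconstrained binary optimization (QUBO) problem, the format used by quantum annealers and algorithms such as QAOA. The minimal sketch below uses hypothetical returns, covariances, and penalty weights for four assets. It solves the QUBO classically by brute force, so it illustrates the problem format, not a quantum solver.

```python
from itertools import product

# Hypothetical inputs for four assets.
expected_return = [0.08, 0.12, 0.10, 0.07]
covariance = [
    [0.010, 0.002, 0.001, 0.000],
    [0.002, 0.030, 0.004, 0.001],
    [0.001, 0.004, 0.020, 0.002],
    [0.000, 0.001, 0.002, 0.008],
]
RISK_AVERSION = 2.0   # weight on portfolio variance (assumed)
BUDGET = 2            # select exactly this many assets (assumed)
PENALTY = 1.0         # cost per squared deviation from the budget (assumed)

def qubo_cost(x):
    """Mean-variance objective for a 0/1 selection vector x, written as a QUBO:
    risk_aversion * x^T C x - mu^T x + penalty * (sum(x) - budget)^2."""
    n = len(x)
    risk = sum(covariance[i][j] * x[i] * x[j] for i in range(n) for j in range(n))
    ret = sum(expected_return[i] * x[i] for i in range(n))
    return RISK_AVERSION * risk - ret + PENALTY * (sum(x) - BUDGET) ** 2

# Classical brute force: evaluate every one of the 2**n possible selections.
best = min(product((0, 1), repeat=len(expected_return)), key=qubo_cost)
print("best selection:", best, "cost:", round(qubo_cost(best), 4))
```

Brute force checks all 2^n selections. That is trivial for 4 assets but infeasible for hundreds, and this gap is where quantum optimization is hoped to help.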

Insurers could also benefit from the ability of quantum algorithms to model complex climate events, weather scenarios, and catastrophe risks. Quantum algorithms could analyze vast amounts of customer data to target customers accurately based on their preferences and risk behaviors, enabling hyper-targeted insurance products and service recommendations. Fraud detection is another area where quantum computers could help. Fraudulent transactions typically make up only a small fraction of any dataset. This makes it difficult for machine learning models running on classical computers to find all the patterns of fraud. Quantum machine learning algorithms may be better able to identify fraud patterns in such skewed, imbalanced datasets.
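The difficulty with imbalanced fraud data is easy to demonstrate. In this hypothetical dataset, 0.5% of transactions are fraudulent. A trivial model that never flags fraud still scores 99.5% accuracy while catching none of the frauds. This is why fraud models are judged on metrics such as recall rather than accuracy. The sketch illustrates the classical problem only and says nothing about quantum methods themselves.

```python
# Hypothetical labels: 1 = fraud, 0 = legitimate; fraud is 0.5% of 10,000 transactions.
labels = [1] * 50 + [0] * 9_950

def always_legitimate(_transaction):
    """A trivial 'model' that never flags fraud."""
    return 0

predictions = [always_legitimate(t) for t in range(len(labels))]

true_pos = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 1)
accuracy = sum(1 for y, p in zip(labels, predictions) if y == p) / len(labels)
recall = true_pos / sum(labels)   # share of actual frauds that were caught

print(f"accuracy={accuracy:.3f}  fraud recall={recall:.3f}")   # accuracy=0.995  fraud recall=0.000
```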

The sour spot of quantum decryption

The massive processing power of quantum computers can benefit insurers in many ways, but it also poses a significant threat to cybersecurity. Whenever we perform an online transaction, cryptographic algorithms protect our privacy, secure our data, and keep us safe. Widely used public-key algorithms such as RSA rely on the difficulty of factoring very large numbers into their prime factors. Elliptic-curve cryptography relies on a similarly hard problem, the discrete logarithm. Classical computers would take an impractically large number of years to solve either problem. However, Shor’s algorithm, run on a sufficiently large and reliable quantum computer, could break these encryption protocols in a comparatively insignificant amount of time. This poses a very serious risk to a wide array of internet services and further amplifies the cyber-risk landscape. Such attacks can take two forms: real-time and retroactive. Today’s quantum computers do not have enough qubits, or low enough error rates, to break the widely used public-key cryptography techniques. Hence, a real-time quantum decryption attack is not expected anytime soon. The risk of such attacks in the future could be mitigated by adopting post-quantum cryptographic algorithms as they continue to evolve.
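Textbook RSA with deliberately tiny primes shows how public-key encryption depends on factoring. Anyone who can factor the public modulus can rebuild the private key from public information alone. Trial division works here only because the numbers are small. For 2048-bit moduli it is hopeless on classical computers, and this is the step that Shor's algorithm on a large fault-tolerant quantum computer would make feasible. The code is an insecure toy for illustration, not a usable implementation.

```python
# Toy RSA with tiny primes (insecure; for illustration only).
p, q = 61, 53
n = p * q                 # public modulus: 3233
phi = (p - 1) * (q - 1)   # 3120; computable only if you know p and q
e = 17                    # public exponent
d = pow(e, -1, phi)       # private exponent via modular inverse (Python 3.8+)

message = 65
ciphertext = pow(message, e, n)
assert pow(ciphertext, d, n) == message   # legitimate decryption with the private key

def factor_by_trial_division(n):
    """Classical brute-force factoring: fine for 3233, hopeless for 2048-bit moduli."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n is prime")

# An attacker who can factor n recovers the private key from public data (n, e) alone.
p2, q2 = factor_by_trial_division(n)
d_recovered = pow(e, -1, (p2 - 1) * (q2 - 1))
print(pow(ciphertext, d_recovered, n))   # 65
```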

Retroactive attacks, also known as “hack now, decrypt later” (HNDL) attacks, should be of immediate concern to everyone. In a retroactive attack, the hacker steals encrypted data today and waits until quantum computers capable of decrypting it become available before unleashing the intended harm. Adopting quantum-secure solutions in the future may offer little relief, because the data has already been compromised. Though a retroactive attack sounds alarming, the actual risk it poses is determined by the following three factors:

- Data expiry date: Every piece of data has an expiry date beyond which it ceases to have any relevance or value. This date varies depending on the type of data and the purpose for which it was created. For example, while transactional data like that of credit card usage has value for a very short period, data pertaining to permanent life insurance or annuity contracts has value for longer periods. On the other hand, data comprising personally identifiable information (PII), protected health information (PHI), classified government data, and trade secrets are highly sensitive and seldom lose value.

- Availability of quantum algorithms: Quantum computers able to run the algorithms that break today’s encryption will become available sometime in the future. Hence, if data hacked now will expire before such capabilities are available, there is no need to worry. However, if the data has a longer expiry date and will still be relevant when these capabilities emerge, it is a genuine concern.

- Availability of post-quantum cryptography: As quantum computing evolves, post-quantum cryptography, also called quantum-resistant cryptography, will evolve too. These encryption algorithms are based on mathematical problems believed to be hard for both classical and quantum computers to solve in any feasible time. The National Institute of Standards and Technology (NIST) is already working to standardize such quantum-resistant encryption algorithms. If industries progressively adopt these standards, the ongoing risk could be well mitigated.
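The three factors above are often summarized as Mosca's inequality: if x + y > z, data encrypted today is at risk. Here x is how long the data must remain confidential and y is the time needed to migrate to quantum-resistant cryptography. z is the time until a cryptographically relevant quantum computer exists. The sketch below applies the inequality to three data types. The shelf lives, the 5-year migration time, and the 15-year horizon are hypothetical planning assumptions, not forecasts.

```python
from dataclasses import dataclass

@dataclass
class DataAsset:
    name: str
    shelf_life_years: float   # x: how long the data must stay confidential

def at_risk_from_hndl(asset, migration_years, years_to_quantum):
    """Mosca's inequality: data encrypted today is exposed if x + y > z."""
    return asset.shelf_life_years + migration_years > years_to_quantum

# Hypothetical planning assumptions, not forecasts.
MIGRATION_YEARS = 5     # y
YEARS_TO_QUANTUM = 15   # z

assets = [
    DataAsset("card transaction logs", 2),
    DataAsset("annuity contract records", 30),
    DataAsset("health records (PHI)", 50),
]
for a in assets:
    flag = "AT RISK" if at_risk_from_hndl(a, MIGRATION_YEARS, YEARS_TO_QUANTUM) else "ok"
    print(f"{a.name:<26} {flag}")
```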

Insurers must handle two different challenges when it comes to quantum decryption. The first challenge is to secure the data they hold, which includes the personal, financial, health, and other sensitive data of customers. To guard against retroactive attacks, insurers need to build quantum resilience. To start with, they will have to create an inventory of all their data, analyze how long each type needs to stay secure, and create an appropriate action plan. They must become crypto-agile, able to switch encryption algorithms with minimal disruption, and adopt quantum-resistant encryption standards as they mature. The second challenge is to determine the risk impact of retroactive attacks to which they are already exposed through the cyber risks they cover. Insurers need to engage with their customers to ensure that they build quantum resilience. At the same time, insurers should revisit their cyber coverages, premium rates, underwriting procedures, and contract terms to adopt preventive risk mitigation plans.
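The inventory step described above might look like the following sketch. The systems, retention periods, and 10-year horizon are hypothetical. Algorithm names are treated as plain labels rather than calls into a cryptographic library. The sketch flags data protected by public-key algorithms that Shor's algorithm would break (RSA and elliptic-curve schemes) whose confidentiality must outlast the assumed horizon. It lists the longest-lived data first.

```python
# Hypothetical inventory; algorithm names are labels, not calls into a crypto library.
QUANTUM_VULNERABLE = {"RSA-2048", "ECDH-P256", "ECDSA-P256"}   # broken by Shor's algorithm
# AES-256 is generally considered to remain adequate (Grover gives only a quadratic
# speedup). Assumption: its keys are not themselves exchanged or wrapped with a
# vulnerable public-key algorithm; if they are, that data is exposed too.

inventory = [
    {"system": "policy admin", "data": "PII", "protected_by": "RSA-2048", "retain_years": 40},
    {"system": "claims portal", "data": "PHI", "protected_by": "ECDH-P256", "retain_years": 25},
    {"system": "payments", "data": "card tokens", "protected_by": "ECDH-P256", "retain_years": 1},
    {"system": "data lake", "data": "archived policies", "protected_by": "AES-256", "retain_years": 30},
]

def migration_plan(inventory, horizon_years):
    """Systems on quantum-vulnerable algorithms whose data must stay secret beyond
    the assumed horizon, longest-lived first."""
    urgent = [r for r in inventory
              if r["protected_by"] in QUANTUM_VULNERABLE and r["retain_years"] > horizon_years]
    return sorted(urgent, key=lambda r: r["retain_years"], reverse=True)

for row in migration_plan(inventory, horizon_years=10):   # 10 years is an assumption
    print(row["system"], row["protected_by"], row["retain_years"])
```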

Looking through the quantum hype

Despite all the hype, quantum computers have not yet demonstrated decisive superiority in quantum simulation and optimization over classical supercomputers, also known as high-performance computing (HPC) systems. This is largely attributed to shortcomings in hardware. Physical qubits are imperfect, unstable, and extremely sensitive to their environment. Many designs, such as superconducting qubits, must operate in a near vacuum at temperatures close to absolute zero, shielded from the earth’s magnetic field. A slight change in the environment can cause qubits to decohere, which destroys the quantum properties of superposition and entanglement and produces erroneous results.
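A simple model shows why decoherence and gate errors limit what today's machines can compute. Assume, hypothetically, that every gate fails independently with a 0.1% probability. The chance that a whole circuit runs without error then falls exponentially with its length: to about 37% at 1,000 gates and to almost nothing at 10,000. Real error processes are more varied; the point here is only the exponential decay.

```python
def circuit_success_probability(error_per_gate, n_gates):
    """Chance that no gate fails, assuming independent errors with equal probability."""
    return (1 - error_per_gate) ** n_gates

for gates in (10, 100, 1_000, 10_000):
    print(f"{gates:>6} gates: {circuit_success_probability(1e-3, gates):.4f}")
```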

Developing a fault-tolerant quantum computer remains a major challenge, as it may require thousands of imperfect physical qubits to create one error-corrected logical qubit. So far, companies have succeeded in building quantum computers with a few hundred physical qubits. We are still in the error-prone noisy intermediate-scale quantum (NISQ) era, and experts differ on whether quantum computers could be of value to businesses before they become fully fault-tolerant. Some experts argue that fault tolerance is essential to creating significant business value. Others believe that fault tolerance is not a binary state but a continuum. Hence, they assert that business use cases for quantum computing can continue to emerge even during the current pre-fault-tolerant phase.
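The overhead of error correction can be estimated with a rule of thumb commonly quoted for the surface code, one leading error-correction scheme. The logical error rate falls roughly as (p/p_th)^((d+1)/2) as the code distance d grows, and a rotated surface code uses 2d^2 - 1 physical qubits per logical qubit. The threshold (about 1%), the prefactor, and the target error rate below are assumed round numbers. The outputs are therefore orders of magnitude, not predictions.

```python
def logical_error_rate(p_phys, distance, p_threshold=1e-2, prefactor=0.1):
    """Rule-of-thumb surface-code scaling: p_L ~ A * (p / p_th) ** ((d + 1) / 2).
    The threshold (~1%) and prefactor A are assumed round numbers."""
    return prefactor * (p_phys / p_threshold) ** ((distance + 1) / 2)

def physical_qubits_per_logical(distance):
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * distance * distance - 1

def required_distance(p_phys, target):
    """Smallest odd code distance whose estimated logical error rate meets the target."""
    if p_phys >= 1e-2:
        raise ValueError("physical error rate must be below the assumed threshold")
    d = 3
    while logical_error_rate(p_phys, d) > target:
        d += 2   # surface-code distances are odd
    return d

for p in (1e-3, 5e-3):
    d = required_distance(p, target=5e-13)
    print(f"p={p:g}  distance={d}  physical qubits per logical qubit={physical_qubits_per_logical(d)}")
```

With a physical error rate of 0.1%, the sketch arrives at roughly a thousand physical qubits per logical qubit. Closer to the threshold, at 0.5%, the figure exceeds ten thousand.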

Any discussion of quantum computers would be incomplete without mentioning supercomputers, for two reasons. First, the performance of quantum computers is always compared against that of supercomputers. Second, quantum computers are currently being explored to solve almost the same set of problems for which supercomputers are used. Supercomputers are classical computers in which a very large number of processors are arranged in a grid or cluster configuration. These processors work together to perform calculations in parallel and produce a single answer much faster than a single processor could. A critical area of quantum computing research is to sort problems into three groups. Some require the accuracy of supercomputers, some can be solved significantly faster by quantum computers, and some are intractable for classical machines and can only be solved by quantum computers. Given their different strengths, it would be a mistake to believe that quantum computers will entirely replace classical computers.
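How much a supercomputer's parallel processors help depends on how much of the work can actually run in parallel. Amdahl's law captures this as S = 1 / ((1 - f) + f / N), for a parallel fraction f on N processors. Monte Carlo trials are largely independent of one another, which is why such workloads suit supercomputers well. The 99% parallel fraction below is an assumption for illustration.

```python
def amdahl_speedup(parallel_fraction, processors):
    """Amdahl's law: S = 1 / ((1 - f) + f / N)."""
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / processors)

for n in (10, 1_000, 100_000):
    print(f"{n:>7} processors: speedup {amdahl_speedup(0.99, n):.1f}x")
```

Even with 100,000 processors, a 1% serial portion caps the speedup just below 100x.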

The fields of quantum computing and classical computing are growing simultaneously. Over the years, the performance of supercomputers, measured in floating-point operations per second (FLOPS), has improved tremendously. We already have exascale computers that can perform more than a quintillion (10^18) floating-point operations per second. The use of supercomputers was traditionally restricted to government and research and development. The reasons were their prohibitive cost, the complexity of building and maintaining them, and their limited practical necessity. Over the past decade, supercomputing vendors have begun to offer HPC-as-a-service (HPCaaS) solutions, democratizing supercomputing by delivering high-performance processing capabilities on the cloud. Instead of purchasing or leasing expensive hardware or software, companies can work with these vendors to configure the infrastructure they need and use the services on a pay-as-you-go basis. However, despite this lower entry barrier, the adoption of supercomputing by insurers has been underwhelming. Only a few large insurance and reinsurance companies use it for risk assessment and underwriting. It is therefore reasonable to presume that the insurance industry will prefer to move slowly in experimenting with or adopting a technology that is unregulated and still evolving.

The path ahead

We need to keep in mind that the current debate and skepticism regarding the efficacy of quantum computers are based on what is available in the market today, not on what is yet to come. Quantum computing companies are continuously investing in overcoming the hardware impediments. They are working to increase the number of qubits in their machines and to improve the quality of those qubits. They have shown progress on both fronts, with machines now reaching a few hundred physical qubits. It is extremely difficult to predict the exact timeframe, but quantum computing is likely to grow manifold over the next few decades. It will evolve through the pre-fault-tolerant era, marked by increasing numbers of physical qubits, into an era of machines with several thousand error-corrected logical qubits. At that point, quantum computing could unleash unprecedented processing power, pushing computational boundaries and solving problems previously thought to be intractable.

Regardless of this progress, classical computers will still be required to provide input to quantum computers and receive their output. This hybrid classical-quantum model is likely to become the dominant design, with quantum computers used as accelerators for classical supercomputers to perform complicated processes. Just as supercomputing capabilities are offered on the cloud as HPCaaS, quantum computing vendors are starting to offer quantum computing as a service (QCaaS). This will democratize access to exceptional processing power, but it could also give bad actors and adversaries cheaper access to quantum computing power with which to break existing encryption. Many governments and regulatory bodies are assessing the impact of quantum computing. They are working on soft and hard laws to plan, prepare, and budget for an efficient transition to quantum-resistant protocols, algorithms, and systems to ensure continued protection.
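The hybrid model can be illustrated with the loop used by variational quantum algorithms such as VQE and QAOA. A classical optimizer proposes circuit parameters, the quantum processor evaluates the circuit, and the classical side updates the parameters. In this minimal sketch, the "quantum" step is a single simulated qubit rotated by RY(theta), whose expected measurement value of Z equals cos(theta). The gradient comes from the parameter-shift rule. On real hardware, each evaluation would be estimated from repeated measurements, for example through a QCaaS offering. The learning rate and step count are arbitrary choices.

```python
import math

def quantum_expectation(theta):
    """Stand-in for the quantum step: prepare RY(theta)|0> and return <Z>.
    Simulated exactly here; hardware would estimate it from repeated shots."""
    amp0, amp1 = math.cos(theta / 2), math.sin(theta / 2)
    return amp0 ** 2 - amp1 ** 2   # equals cos(theta)

def parameter_shift_gradient(theta):
    """Exact gradient of <Z> from two shifted circuit evaluations (parameter-shift rule)."""
    return (quantum_expectation(theta + math.pi / 2)
            - quantum_expectation(theta - math.pi / 2)) / 2

def classical_optimizer(theta=0.1, learning_rate=0.4, steps=100):
    """Classical outer loop: gradient descent that minimizes the quantum step's output."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, quantum_expectation(theta)

theta, energy = classical_optimizer()
print(f"theta={theta:.4f}  <Z>={energy:.4f}")
```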

With the tectonic changes that quantum computing could drive in the computing and risk landscape, insurance companies cannot afford to follow a wait-and-watch approach. They must begin to develop a strategy for quantum computing that addresses two issues: how to adopt and leverage the power of quantum computing in processes, and how to anticipate the risks that might arise because of it. To do both of these, insurers will have to stay continuously updated on how this field is evolving. They should closely follow the core research and the governing laws and regulations that are shaping up.

To embrace quantum computing, insurers will have to evaluate their use cases and the applicability of quantum computing to solve their problems. These use cases will continue to evolve alongside the progress of quantum computers from the pre-fault-tolerant to the fault-tolerant era. Insurers need to assess whether quantum computers provide significantly faster and more accurate results than classical computers. They should distinguish between problems that require the power of quantum computing and those that can be solved with traditional supercomputers.

To preempt the risks emanating from quantum computers, insurers must become quantum-agile and progressively embrace quantum-resistant protocols, systems, and algorithms. They need to be wary of the risks posed by both real-time and retroactive attacks. The emerging technologies of the fourth industrial revolution are creating a cyber-physical network in which physical and software systems are intertwined. These systems are capable of making decisions and operating independently. Hence, it would be a misjudgment to associate quantum decryption attacks only with hacking, data theft, and ransomware. It would be equally wrong to presume that the risks can be contained simply by restricting cyber-risk exposures, because they could also manifest in a variety of cyber-physical forms.

Quantum computing must overcome many challenges before it becomes mainstream, but insurers need to start collaborating with various stakeholders now to prepare for the future. At present, only a few large insurance and reinsurance companies are exploring the applicability of quantum computing. Some commercially viable use cases may be realized within a decade. Insurers must start investing in a hybrid classical-quantum strategy to develop the expertise needed to realize benefits when the breakthrough occurs. Given the potential consequences, ignorance or inaction could prove to be a significant risk in its own right.

SRIVATHSAN KARANAI MARGAN works as an insurance domain consultant at Tata Consultancy Services Limited.
