By R. Greg Szrama III

The name itself sounds cynical—Zero Trust. It evokes an image of the X-Files informant Deep Throat telling Mulder to “Trust no one.” Sure enough, the most secure computer is one that is unplugged and turned off. Unfortunately, this means that computer is also not very effective; it is devoid of purpose. The thought follows, if we trust no one, what useful work can we get done?

This question lies at the heart of a great deal of confusion surrounding the zero-trust model, even among those implementing it. The National Institute of Standards and Technology (NIST) definition only helps a little in clearing up this confusion:

Zero trust is a set of cybersecurity principles used to create a strategy that focuses on moving network defenses from wide, static network perimeters to focusing more narrowly on subjects, enterprise assets (i.e., devices, infrastructure components, applications, virtual and cloud components), and individual or small groups of resources.

So, how does this practically help us—and why are we talking about it in the first place?

The Challenges of Traditional Cyber Security

The classic metaphor for cyber security is a castle. The most secure castles employed multiple layers of defenses to protect wealth or important persons, often with a moat, multiple walls, watchtowers, gates, and numerous other features. Following this metaphor, we distinguish devices and users as to whether they’re “inside” the castle and trustable or “outside” the castle and risky.

As with guarding a traditional castle, the defender’s dilemma is the need to succeed 100% of the time while an attacker only needs to succeed once. In truth, the attacker controls the engagement, as a castle (or a computer network in our case) is a fixed installation. Necessity forces the defenders into a reactive posture, trying both to anticipate and to counter all incoming attacks. Like any fortress, we can never consider an electronic network fully secure; breaches will happen. As with other security disciplines, the goal evolves into slowing the attacker down. We distract attackers with honeypots and compartmentalize assets so that the inevitable breaches are contained.

This dilemma leads to a stalemate between customers and users needing flexibility to do business, security professionals protecting data and assets, and attackers looking for any weakness to exploit. As businesses develop new methods of using and sharing data, more access points are opened in our networks. More access points lead to more points of attack. More points of attack lead to novel defensive techniques, but these also risk limiting the functionality of those new business processes.

At first, simple firewalls—specialized servers used to ensure “outside” computers have only restricted access to “inside” computers—provided sufficient defense. This worked so long as we could readily identify traffic and all communication was constrained to a few dedicated avenues, such as serving up webpages or receiving a feed of financial market data. Increases in interconnectivity and the rapid expansion of communication technologies, however, carried with them increases in the complexity of communications traffic being monitored. This specific security limitation burst into the public consciousness with Web 2.0 and the numerous breaches of credit card numbers and other personal data.

Security professionals quickly developed new approaches, such as proactive intrusion detection systems (IDSs), which aim to identify malicious activity using automated techniques. As the technology to identify and respond to breaches matured, so did the nature of the attacks. The best detection software can do nothing about social engineering attacks, in which attackers manipulate personnel into revealing personal information or passwords. This forced an additional focus on educating the workforce, as the most pernicious cyber security threats are not always technical in nature.

Amid this back-and-forth between network defenders and attackers, user needs continued to evolve as well. Businesses are keen to streamline and to operationalize internet-of-things systems to track and manage inventory, to interact with remote sensor networks, to manage fleets of vehicles or drones, and to support many other industry-specific use cases. Workers continue to move toward remote work arrangements and to clamor for bring-your-own-device policies. Cloud adoption is accelerating and carries with it an increased reliance on a wide range of new service models, including software-, platform-, and infrastructure-as-a-service.

Each of these trends requires increased interaction between devices “inside” and “outside” the trusted network to ensure full functionality. In many cases, especially those involving cloud services, entire networks of devices exist in no definable physical location. These services directly power our modern quality of life. They allow you to purchase new shoes on Amazon from your phone, to stream Netflix to the device of your choice, to access digital assistants like Siri or Alexa, to reach your destination safely with Google Maps, and to maintain quarantines while ordering your groceries through Instacart. They also render the traditional idea of a closed, protected corporate network entirely untenable.

A New (Old) Way of Thinking

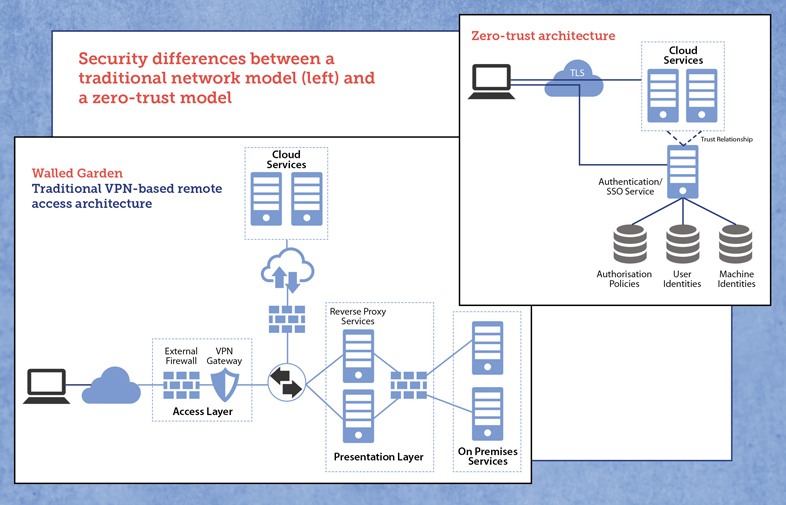

The key concept behind zero trust is that networks are no longer split between an “inside” and an “outside” zone. Devices and users are no longer implicitly trusted. Under the classic paradigm, a trusted insider with access to the corporate network had few practical impediments to compromising it. Having physical presence mattered. This approach to cybersecurity enabled too many breaches to count. Modern computing needs have rendered the classic approach obsolete just as modern defensive strategies have moved away from city and castle walls.

This methodology, also called perimeterless security, is not strictly a new concept. The Jericho Forum began addressing de-perimeterisation in 2003 and published the Jericho Forum Commandments in 2006. They aimed to codify new thinking about cyber security into an actionable roadmap for organizations to use in forward planning. Google deployed BeyondCorp, a zero-trust network, in 2009. Still, it took another nine years before NIST would publish SP 800-207, Zero Trust Architecture. Following close after, the United Kingdom National Cyber Security Centre recommended in 2019 that all new IT deployments should consider zero-trust principles, especially those using cloud services.

When we drop the distinction between “inside” and “outside” the network, we need new approaches to securing data and services. A network firewall is still beneficial for providing basic network protection (defense in depth is still valuable), but we also need to design each system and service with the capability of protecting itself. The approach to cyber security shifts from securing whole networks to tailoring security to individual assets. This has the added benefit of enabling customized access to a protected resource based on the sensitivity of that resource. For example, a company may have websites or web applications it wants to make accessible only to employees within a certain geographic area. Under this model, the web server itself would enforce an access policy that checks the location of the user before granting access.
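As a rough illustration of the geographic example above, the Python sketch below evaluates a policy attached to a single resource on every request. The names (`Request`, `ResourcePolicy`, `is_allowed`), the region codes, and the assumption that the requester's employment status and region have already been verified upstream are all hypothetical; this is one possible shape for such a check, not a description of any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    """Facts about one request, assumed to be verified upstream."""
    user_id: str
    is_employee: bool
    region: str  # hypothetical region code derived from the connection


@dataclass(frozen=True)
class ResourcePolicy:
    """Policy attached to a single resource rather than to a network."""
    employees_only: bool
    allowed_regions: frozenset


def is_allowed(request: Request, policy: ResourcePolicy) -> bool:
    """Evaluate the policy on every request; nothing is trusted by default."""
    if policy.employees_only and not request.is_employee:
        return False
    return request.region in policy.allowed_regions


# Hypothetical policy for an internal web application.
intranet_policy = ResourcePolicy(
    employees_only=True,
    allowed_regions=frozenset({"US-VA", "US-MD"}),
)
```

Because the policy lives with the resource, a more sensitive application can carry a stricter policy without any change to the network around it.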

The name notwithstanding, trust ironically becomes more critical in these configurations. The key, however, is that trust is established between parties each and every time a transaction occurs. A device requesting data must trust the service provider just as much as the service provider must trust the device. While the overall network layout may become simpler, the zero-trust model complicates each transaction within that network. This leads to one of the primary drawbacks of zero-trust approaches: they can increase the time required to complete transactions. This generally does not impact the usability of a system but can present concerns for complex or time-sensitive applications, especially applications spanning hybrid or multi-cloud systems.

How Does This Achieve Security?

The classic defense approach is fatally flawed. We can never prove a network is secure because we can never prove the absence of vulnerabilities (think back to symbolic logic and the difficulty of proving a negative such as “no unicorns exist”). Attackers assume corporate networks have standard layered security measures in place, such as firewalls, multi-factor authentication, monitoring, and encryption. Consequently, they look for asymmetrical weaknesses, especially those involving compromising people.

Zero-trust architectures circumvent this struggle by assuming that perimeter-based defense will never achieve security on its own. Achieving security through this new paradigm requires fundamental changes to how we conceptualize computer systems.

One example of this shift in thinking is the way we view securing data. In the past, we tended to view data security as a separate concern from the data itself. The database server would encrypt data and provide access controls, but once you gained access (through your account or through a database vulnerability) you had some measure of trust to view and manipulate data. The Office of Personnel Management (OPM) hack in 2015 is an excellent case study in this. Attackers believed to be sponsored by the Chinese government gained access to the credentials of a trusted insider and leveraged that into breaching over 22 million records, including sensitive records related to the security clearance process.

Under a mature zero-trust paradigm, data itself is tagged with attributes describing who can access it. Each data element, then, is aware of the preconditions for viewing it and a database would prevent any data access that does not meet those requirements. While this approach may not have prevented the OPM incident on its own, it would at minimum have reduced its impact and increased the opportunities for detection. Validating a need to know on each data transaction creates a clear audit trail and incorporates the principle of least privilege in a fundamental way that is difficult or impossible to circumvent.
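The idea of data that carries its own access requirements can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the `Record` and `TaggedStore` classes, the attribute names, and the in-memory audit log stand in for what would in practice be a database feature and a tamper-resistant log.

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    """A data element that carries its own access requirements."""
    value: str
    required_attributes: frozenset  # attributes a reader must hold


@dataclass
class TaggedStore:
    """Toy data store that checks tags and logs every access attempt."""
    records: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)

    def read(self, user: str, user_attributes: set, key: str, reason: str):
        record = self.records[key]
        # Access requires every attribute listed on the record itself.
        granted = record.required_attributes <= set(user_attributes)
        # Log the attempt whether or not it succeeds.
        self.audit_log.append((user, key, reason, granted))
        if not granted:
            raise PermissionError(f"{user} may not read {key}")
        return record.value
```

In this sketch, holding a database administrator account is not enough to read a record. The caller must hold the attributes named on that record, and both successful and denied attempts leave an entry in the log.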

How Does Zero Trust Support Organizational Missions?

In a survey of chief information security officers (CISOs) in 2015, the RAND Corporation noted, “It was striking how frequently reputation was cited by CISOs as a prime cause for cybersecurity spending, as opposed to protecting actual intellectual property.” Interconnected systems require a certain level of trust from users and the general public to realize their benefits. Multiple studies underline the risk to organizations of data breaches, including that up to 87% of consumers will take business to a competitor if they feel their data is not handled responsibly.

The need for data security bumps up against organizational goals constantly. Cloud computing is often billed as the answer to all connectivity and computing needs, but it also carries potential risk as organizations no longer directly control their physical computing infrastructure. Incorporating zero-trust principles helps organizations to safely and securely realize the benefits of these new platforms.

Take the growth of “predict & prevent” models of insuring as an example. McKinsey & Company predicts that by 2030 this model will be ubiquitous among consumer and business policies. These models are built on a constant stream of data from connected devices. AI-based analytics and decisioning platforms can already provide real-time feedback from these data streams to reduce risk and prevent accidents before they happen.

The interconnected networks of devices necessary to power these tools are impractical in a traditional perimeter-based security approach. Trying to stream data from hundreds of thousands or even millions of connected devices to a traditional data center will overwhelm all but the costliest internet connections. Cloud presence is critical to realizing this scale. Devices need the ability to freely connect with one another in a secure fashion, but traditional firewalls and intrusion detection systems create the need for a constantly growing number of customized rules allowing those connections. Most importantly, the traditional methods of securing customer data set up organizations for further data breaches.

Adopting zero trust as a foundational organizational principle ensures that networks and data in the future are secure by design. Data stored in an insurer’s network might be tagged for the insured user, with time-controlled and audited access to that data. The insurer might build tools that ensure the insured user can securely view the audit records and know exactly who has accessed their data and why.

Zero trust also pairs seamlessly with other trust systems, such as distributed ledgers (blockchains). A key tenet of the Jericho Forum’s Commandments is transparency in trust among all parties to a transaction. The obligations of each party must be known and understood. Blockchain-based smart contracts can not only create an audit trail of these obligations but can even enforce them automatically. Time bounds, for example, might be placed on accessing data, with lockout triggered automatically once the authorized period has expired.
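The time-bound access described above can be illustrated with ordinary Python. This is not smart-contract code; in a ledger-based deployment, equivalent logic would presumably live in the contract itself. The `AccessGrant` class, the `grant_is_valid` function, and the grantee and data set names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class AccessGrant:
    """Permission for one party to read one data set until `expires_at`."""
    grantee: str
    dataset: str
    expires_at: datetime


def grant_is_valid(grant: AccessGrant, grantee: str, dataset: str,
                   now: datetime) -> bool:
    """A grant covers only its own grantee and data set, and only until expiry."""
    return (grant.grantee == grantee
            and grant.dataset == dataset
            and now < grant.expires_at)


# Hypothetical 30-day grant issued to a claims adjuster.
issued = datetime(2022, 1, 1, tzinfo=timezone.utc)
grant = AccessGrant("claims-adjuster", "telematics/policy-123",
                    expires_at=issued + timedelta(days=30))
```

Checking the grant on every access, rather than once at login, is what makes the lockout automatic: once the authorized period has passed, the same request simply stops succeeding.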

Avoiding the Next SolarWinds Attack

Perhaps the most direct application of adopting zero-trust principles, and enforcing them, is in the provision and maintenance of cyber insurance. When underwriting organizations for their exposure to data breaches, an awareness of their zero-trust security posture can be a significant differentiator between policies.

The SolarWinds attack highlighted the need for both zero-trust principles and cyber insurance in general. In 2020, alleged Russian hackers placed malicious code in an update for the Orion system used by over 33,000 customers. This created openings in the networks of most of the Fortune 500, including Microsoft, Cisco, Intel, and Deloitte, in addition to numerous U.S. state and federal agencies. The attack went undetected for almost six months during which additional malware was installed on these networks.

Weak internal security contributed to the severity of the attack: compromising one computer hosting the Orion software gave attackers a jumping-off point to infect and compromise other computer systems. Zero-trust principles are one method of reducing or eliminating the threat of these types of breaches. With proper compartmentalization and strict access controls in place, attackers may still compromise one system, such as Orion, but their access stays limited to that one system.
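The effect of compartmentalization can be shown with a small reachability calculation. The sketch below is only a toy model: the system names and the two connection maps are invented, and a real monitoring tool would need some carefully scoped access rather than none.

```python
from collections import deque


def reachable(start: str, allowed: dict) -> set:
    """Return every system reachable from `start` via allowed connections."""
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for neighbor in allowed.get(current, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


systems = ["monitoring", "mail", "hr-db", "finance"]

# Flat "inside" network: every system may connect to every other system.
flat = {s: [t for t in systems if t != s] for s in systems}

# Default deny: only explicitly listed connections are permitted.
segmented = {"mail": ["hr-db"]}  # monitoring has no outbound grants here
```

Starting from a compromised `monitoring` host, `reachable("monitoring", flat)` covers all four systems, while `reachable("monitoring", segmented)` contains only the compromised host itself.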

Moving Forward to Zero Trust

The fallout of the SolarWinds attack continues to be long-lasting and severe. A Gallagher report highlights some of those consequences, especially as they relate to the insurance industry. The report describes broad changes in the policies covering extortion and ransomware, increases in deductibles and premiums across the board, and new exclusions for nation-state attacks, just to name a few. This attack targeted the IT supply chain and created broad disruptions in services that also impacted insurers who were themselves compromised. Adopting a “never trust, always verify” approach, as zero trust is often described, will help mitigate or even eliminate the effectiveness of these types of attacks. Earning the trust of our clients demands nothing less.

R. GREG SZRAMA III is a software engineering manager at Accenture, where he focuses on high-throughput data processing applications.

Internet of Things

The internet of things, or IoT, is an umbrella term describing physical objects with embedded computing power and with the capability of exchanging information over the public internet or other computing networks. These embedded devices include microprocessors and software and can operate independently of one another. They may also include various sensors, cameras, microphones, and other necessary components. Common applications of IoT devices include smart home capabilities for thermostats and appliances, vehicles with telemetry and infotainment systems, inventory management for manufacturing and warehousing, and remote sensing networks to monitor air, soil, or water quality. In 2017, Gartner estimated there were 8.4 billion connected “things” on the internet, with a trajectory toward 20.4 billion “things” by 2020. According to Statista, there are approximately 5 billion people using the internet on a given day. Set against Gartner’s 2020 projection, this gives a ratio of roughly four IoT devices per person on the internet. With the number of devices growing rapidly, securing this data and maintaining public accountability is essential.

Improving the Nation’s Cybersecurity

On May 7, 2021, cyber criminals, using password details found on the dark web, launched a ransomware attack on the Colonial Pipeline, the largest pipeline for refined petroleum products in the United States. The attack targeted payment systems, but operators shut down the pipeline out of concern the perpetrators could have breached other systems. To alleviate supply issues, President Biden declared a state of emergency on May 9, allowing suppliers to exceed limits on transport via other methods, including road and rail. Three days later, on May 12, the president signed Executive Order 14028, titled Improving the Nation’s Cybersecurity. The Colonial Pipeline attack was not the only impetus for this order, but it did underscore the depth of vulnerability in critical infrastructure to cyber disruption.

The EO directly addresses the need for zero-trust security in the federal government. Section three, subtitled Modernizing Federal Government Cybersecurity, requires that within 60 days the head of each agency shall, among other things:

…develop a plan to implement Zero Trust Architecture, which shall incorporate, as appropriate, the migration steps that the National Institute of Standards and Technology (NIST) within the Department of Commerce has outlined in standards and guidance, describe any such steps that have already been completed, identify activities that will have the most immediate security impact, and include a schedule to implement them…

The EO also leaves unambiguous that the Cybersecurity and Infrastructure Security Agency, a part of the Department of Homeland Security, “shall modernize its current cybersecurity programs, services, and capabilities to be fully functional with cloud-computing environments with Zero Trust Architecture.”

While the EO is targeted primarily at the federal government, including its contractors and suppliers, industry will also benefit from the new guidelines and standards produced. NIST is at the forefront of drafting these guidelines. That body was charged with defining, among other things, criteria for evaluating software security and the practices of developers and suppliers. The order also directed NIST to establish two new labeling regimes focused on internet-of-things devices and consumer software. The goal of these requirements is to encourage manufacturers to build more secure products and to keep purchasers informed about the cybersecurity risks and capabilities of those products.

The Colonial Pipeline incident is safely behind us. The FBI has even recovered a portion of the ransom payment—63.7 bitcoin of the 75 originally paid. The pipeline is securely flowing again, and the supply crunch caused specifically by this incident was resolved. We avoided a worst-case scenario this time. And now regulators and industry finally seem aware the threat is not just theoretical.

The 2020 United States Federal Government Data Breach

In 2020, a wide-ranging cyber-attack compromised multiple governmental, international, and private organizations. Victims included NATO, the U.K. government, the European Parliament, the U.S. Treasury Department, the U.S. Department of Commerce, and many more. This attack targeted products from three key IT vendors: Microsoft, SolarWinds, and VMware. The FBI identified the Foreign Intelligence Service of the Russian Federation (SVR) as instigators of the attack.

While the attackers employed multiple attack vectors to compromise their victims, both Microsoft and SolarWinds products were subject to a flavor of exploit called a supply chain attack. The goal of such an attack is to compromise a hardware or software asset at the manufacturing or distribution points of the supply chain network.

In the case of the Microsoft exploits, the attackers compromised a reseller of Microsoft cloud services, allowing access to services used by the reseller’s customers. In the case of SolarWinds, the attackers breached software publishing infrastructure used to provide updates to the Orion software used for network monitoring. They planted remote access Trojans in multiple updates to Orion. Though they reportedly only accessed a small fraction of the successful malware deployments, potential targets included most of the Fortune 500 companies, as well as many, many governmental agencies.

The breach of SolarWinds was missed by U.S. Cyber Command and was instead found by FireEye, a private cybersecurity firm that discovered its own systems had been breached. The attack was sophisticated and long planned, with exceptional care given to avoiding detection. It used a combination of previously undisclosed “zero day” exploits and manipulation of trusted accounts to access, among other things, senior Treasury Department email accounts.

This attack underscored two critical weaknesses in IT systems. The first is that we put a lot of trust in software suppliers to create secure platforms. We also share a lot of information with these vendors to arrive at the right mix of products, but this risks exposure when the vendor is compromised. The second key lesson, related to the first, is we cannot implicitly trust a piece of software on our network. The primary exposure from the SolarWinds attack did not come from the Orion product itself. It came from other systems on the network that attackers managed to exploit.